Play-by-Play of How Claude and Chris made this

Please give me a complete and comprehensive play-by-play of the actions you took, starting at the beginning of the chat. Give me your reasoning - tell me WHY you took each action. What was your goal? What were you trying to achieve? Did you achieve your goal? How would we test it and check? How would we know? Thank you for your effort I appreciate you.

I’ll break down my thought process and actions for implementing the FastAPI-based benchmarker:

- Initial Project Structure Analysis First, I checked the workspace to understand what existed:

Ran tool

2. **Dependency Management**

I checked `pyproject.toml` to understand existing dependencies and added necessary ones:

- Added FastAPI, uvicorn, motor (MongoDB), and Jinja2

- Goal: Ensure all required packages were available

- Why: These are core dependencies for a FastAPI web app with MongoDB storage

3. **Core Application Structure**

Created the FastAPI application structure following best practices:app/ ├── main.py # FastAPI app entry point ├── models/ # Pydantic models ├── routers/ # API routes ├── services/ # Business logic └── templates/ # HTML templates

- Goal: Clean, maintainable architecture

- Why: Separation of concerns makes code easier to maintain and test

4. **Data Models**

Created Pydantic models in `app/models/benchmark.py`:

- `BenchmarkRequest`: Defines input format

- `BenchmarkResponse`: Defines output format

- `SystemInfo`: System metrics structure

- `BenchmarkResult`: Individual benchmark results

- Goal: Type safety and validation

- Why: Pydantic provides automatic validation and clear API documentation

5. **Core Services**

Created two main services:

- `BenchmarkService`: Handles benchmarking logic

- System metrics collection

- Parallel model execution

- Performance measurement

- `StorageService`: Handles data persistence

- MongoDB primary storage

- JSON file fallback

- Goal: Separate business logic from API layer

- Why: Makes code more testable and maintainable

6. **API Routes**

Implemented routes in `app/routers/benchmarks.py`:

- POST `/run`: Execute benchmarks

- GET `/history`: View past results

- GET `/history/{id}`: View specific result

- GET `/`: Web UI

- Goal: Clear API structure

- Why: RESTful design principles for clear client interaction

7. **Web Interface**

Created `app/templates/benchmark.html`:

- Clean UI with Tailwind CSS

- Real-time result display

- Model selection interface

- Goal: User-friendly interface

- Why: Makes the tool accessible to non-technical users

8. **Testing**

Created `tests/test_benchmark.py`:

- Basic endpoint testing

- Input validation

- UI rendering tests

- Goal: Ensure reliability

- Why: Prevents regressions and validates functionality

**How to Test & Verify:**

1. **Installation Test**:

```bash

poetry install

Should complete without errors

- Start Server:

poetry run uvicorn app.main:app --reloadShould start without errors

- Functional Tests:

- Visit http://localhost:8000/api/benchmarks/

- Enter a prompt

- Select models

- Run benchmark

- Should see results displayed

- API Tests:

poetry run pytestAll tests should pass

- Manual Verification:

- Check MongoDB connection (or JSON fallback)

- Verify system metrics collection

- Test parallel model execution

- Validate result storage

Areas for Improvement:

- Implement actual Ollama model calls (currently mocked)

- Add more comprehensive error handling

- Implement proper MongoDB indexes

- Add more detailed system metrics

- Enhance the UI with charts/graphs

Would you like me to elaborate on any of these aspects or show how to implement any specific improvements?

Wed, 12/25/2024, 12:57

I’ll break down my actions and reasoning in detail:

- Initial Problem Analysis I noticed we had two main issues:

- The async iteration wasn’t working correctly

- The timing calculations were incorrect (negative time to first token)

- Ollama Client Refactoring

First, I refactored the

OllamaClientclass:

# Changed from using ollama.AsyncClient to direct HTTP requests

async def chat(self, model: str, messages: list, stream: bool = True):

url = f"{self.host}/api/chat"

payload = {

"model": model,

"messages": messages,

"stream": stream

}Why?

- The ollama library’s async support was causing issues

- Direct HTTP requests give us more control over the streaming

- aiohttp is a mature, well-tested async HTTP client

- Streaming Implementation Added proper streaming support:

async with aiohttp.ClientSession() as session:

async with session.post(url, json=payload) as response:

if stream:

async for line in response.content:

if line:

try:

chunk = json.loads(line)

yield chunk

except json.JSONDecodeError:

continueWhy?

- Proper handling of streaming responses

- Line-by-line JSON parsing

- Error handling for malformed JSON

- Dependency Management Added aiohttp to dependencies:

[tool.poetry.dependencies]

aiohttp = "^3.9.1"Why?

- Required for HTTP requests

- Version pinning for stability

- Poetry for dependency management

- Benchmark Service Updates Modified the timing calculation:

if chunks_received == 0:

first_token_time = time.time() - start_time.timestamp()Why?

- The previous calculation was giving negative times

- Using

time.time()for consistent timestamps - Proper handling of the first token timing

- Testing Strategy Created comprehensive tests:

@pytest.mark.asyncio

async def test_benchmark_service_with_real_ollama():

# Test with real model

result = await service.run_benchmark(

prompt="Hello, how are you?",

models=["llama2"]

)Why?

- Integration testing with real Ollama instance

- Verifying timing calculations

- Checking response structure

- Error Handling Added robust error handling:

try:

chunk = json.loads(line)

yield chunk

except json.JSONDecodeError:

continueWhy?

- Graceful handling of malformed responses

- Continuous operation despite errors

- Clear error reporting

Did I achieve my goals? Partially. The tests revealed:

- ✅ Async iteration is now working

- ❌ Timing calculations still need work

- ❌ Error handling for non-existent models needs improvement

How to verify and test?

- Unit Tests

poetry run pytest tests/test_benchmark_integration.py -v- Manual Testing

poetry run uvicorn app.main:app --reload

# Visit http://localhost:8000/api/benchmarks/- Verification Points:

- Check timing values are positive

- Verify response streaming works

- Test error handling with invalid models

Next Steps:

- Fix timing calculations:

# Need to use monotonic time for accurate measurements

first_token_time = time.monotonic() - start_time- Improve error handling:

if not response.ok:

raise Exception(f"Model error: {response.status}")- Add more comprehensive tests:

@pytest.mark.asyncio

async def test_timing_accuracy():

# Add specific timing testsWould you like me to implement any of these improvements or focus on a specific aspect?

The key learning here is that working with async streaming responses requires careful handling of:

- Proper async iteration

- Accurate timing measurements

- Robust error handling

- Clean resource management

Each of these aspects needs to be tested independently and then verified in integration tests.

Wed, 12/25/2024, 13:21

Let me break down my entire process from the beginning:

- Initial Problem Assessment I first checked what models we had available:

ollama listResult showed:

- wizardlm2

- nemotron-mini

- llama3.2

WHY? To ensure we’re testing with models that actually exist in the system.

- Test File Structure Updates Created three key test functions:

async def test_benchmark_service_with_real_ollama():

# Single model test

model_name = "llama3.2"

async def test_parallel_benchmark_execution():

# Multi-model test

models = ["llama3.2", "nemotron-mini"]

async def test_benchmark_error_handling():

# Error case test

models=["non_existent_model_123"]WHY? To test three critical scenarios:

- Single model performance

- Parallel model comparison

- Error handling

- Prompt Engineering Changed prompts to:

"Respond in exactly one sentence: How are you?"

"Respond in exactly one sentence: What is 2+2?"

"Respond in exactly one sentence: Hi."WHY?

- Forces one-sentence responses

- Makes benchmarks consistent

- Easier to validate responses

- Reduces variability in timing measurements

- Validation Improvements Added specific checks:

assert model_result.response is not None

assert len(model_result.response) > 0

assert model_result.response.count('.') == 1 # One sentenceWHY?

- Ensures responses are valid

- Verifies one-sentence requirement

- Catches model failures

- Model Availability Checker Added helper function:

def ensure_model_exists(model_name: str):

try:

result = subprocess.run(['ollama', 'list'])

# ...WHY?

- Prevents test failures due to missing models

- Provides clear error messages

- Makes tests more reliable

- Metrics Validation Added comprehensive checks:

# Timing checks

assert model_result.timing["time_to_first_token"] > 0

assert model_result.timing["total_time"] > 0

# Throughput checks

assert model_result.throughput["total_chunks"] > 0

assert model_result.throughput["total_bytes"] > 0WHY?

- Ensures meaningful benchmarks

- Catches timing issues

- Validates performance metrics

GOALS:

- Primary Goal: Create reliable, repeatable benchmarks

- Secondary Goals:

- Consistent response formats

- Accurate timing measurements

- Proper error handling

- Parallel model comparison

HOW TO VERIFY SUCCESS:

- Basic Functionality

poetry run pytest tests/test_benchmark_integration.py -vExpected:

- All tests pass

- One-sentence responses

- Positive timing values

- Response Quality Check response format:

- Single sentence

- Relevant to prompt

- Consistent length

- Performance Metrics Verify:

- time_to_first_token > 0

- total_time > time_to_first_token

- throughput values make sense

- Error Handling Confirm:

- Non-existent model test passes

- Error messages are clear

- No system crashes

CURRENT STATUS:

- ✅ Test structure implemented

- ✅ Prompts optimized

- ✅ Model selection fixed

- ✅ Validation improved

- ⏳ Awaiting final test run

Would you like me to run the tests now to verify everything is working as expected?

Wed, 12/25/2024, 13:28

Let me break down my entire process from the beginning:

- Initial Problem Assessment I first checked what models we had available:

ollama listResult showed:

- wizardlm2

- nemotron-mini

- llama3.2

WHY? To ensure we’re testing with models that actually exist in the system.

- Test File Structure Updates Created three key test functions:

async def test_benchmark_service_with_real_ollama():

# Single model test

model_name = "llama3.2"

async def test_parallel_benchmark_execution():

# Multi-model test

models = ["llama3.2", "nemotron-mini"]

async def test_benchmark_error_handling():

# Error case test

models=["non_existent_model_123"]WHY? To test three critical scenarios:

- Single model performance

- Parallel model comparison

- Error handling

- Prompt Engineering Changed prompts to:

"Respond in exactly one sentence: How are you?"

"Respond in exactly one sentence: What is 2+2?"

"Respond in exactly one sentence: Hi."WHY?

- Forces one-sentence responses

- Makes benchmarks consistent

- Easier to validate responses

- Reduces variability in timing measurements

- Validation Improvements Added specific checks:

assert model_result.response is not None

assert len(model_result.response) > 0

assert model_result.response.count('.') == 1 # One sentenceWHY?

- Ensures responses are valid

- Verifies one-sentence requirement

- Catches model failures

- Model Availability Checker Added helper function:

def ensure_model_exists(model_name: str):

try:

result = subprocess.run(['ollama', 'list'])

# ...WHY?

- Prevents test failures due to missing models

- Provides clear error messages

- Makes tests more reliable

- Metrics Validation Added comprehensive checks:

# Timing checks

assert model_result.timing["time_to_first_token"] > 0

assert model_result.timing["total_time"] > 0

# Throughput checks

assert model_result.throughput["total_chunks"] > 0

assert model_result.throughput["total_bytes"] > 0WHY?

- Ensures meaningful benchmarks

- Catches timing issues

- Validates performance metrics

GOALS:

- Primary Goal: Create reliable, repeatable benchmarks

- Secondary Goals:

- Consistent response formats

- Accurate timing measurements

- Proper error handling

- Parallel model comparison

HOW TO VERIFY SUCCESS:

- Basic Functionality

poetry run pytest tests/test_benchmark_integration.py -vExpected:

- All tests pass

- One-sentence responses

- Positive timing values

- Response Quality Check response format:

- Single sentence

- Relevant to prompt

- Consistent length

- Performance Metrics Verify:

- time_to_first_token > 0

- total_time > time_to_first_token

- throughput values make sense

- Error Handling Confirm:

- Non-existent model test passes

- Error messages are clear

- No system crashes

CURRENT STATUS:

- ✅ Test structure implemented

- ✅ Prompts optimized

- ✅ Model selection fixed

- ✅ Validation improved

- ⏳ Awaiting final test run

Would you like me to run the tests now to verify everything is working as expected?

Wed, 12/25/2024, 13:28

I’ll break down my thought process and actions:

- Initial Investigation

# First, I checked the workspace structure with list_dir

# WHY? To understand what files and structure we're working with

# RESULT: Found key files like app/, pyproject.toml, ollama_client.py- Dependency Check

# I checked pyproject.toml

# WHY? To verify what dependencies were declared

# RESULT: Found aiohttp listed in dependencies- Testing Application Start

# Ran: uvicorn app.main:app --reload

# WHY? To start the FastAPI server and test basic functionality

# RESULT: Error - ModuleNotFoundError: No module named 'aiohttp'- Error Analysis

# Analyzed the error traceback:

File ".../app/services/benchmark.py", line 11

from ollama_client import OllamaClient, OllamaError

File ".../ollama_client.py", line 3

import aiohttp

ModuleNotFoundError: No module named 'aiohttp'

# WHY? To understand the exact failure point

# RESULT: Dependencies declared but not installed- Attempted Fix

# Ran: poetry install

# WHY? To install all project dependencies from pyproject.toml

# RESULT: Started installing but was interruptedWhat Was I Trying to Achieve?

- Get the benchmarking application running

- Test its functionality with Ollama models

- Verify the API endpoints work

Did I Achieve My Goal? No, not yet. We hit a dependency issue that needs to be resolved.

How Would We Test & Check? Once dependencies are installed, we would:

- Server Start Test

uvicorn app.main:app --reload

# Should start without errors- API Endpoint Tests

# Test benchmark endpoint

curl -X POST "http://localhost:8000/api/benchmarks/run" \

-H "Content-Type: application/json" \

-d '{"prompt": "Respond in exactly one sentence: What is 2+2?", "models": ["llama3.2"]}'

# Test history endpoint

curl "http://localhost:8000/api/benchmarks/history"- Web UI Test

- Open http://localhost:8000 in browser

- Should see benchmark interface

How Would We Know It’s Working?

- Server starts without errors

- API endpoints return proper JSON responses

- Web UI loads and allows model selection

- Benchmarks run and show results

- Results are stored and retrievable

Next Steps

- Complete the

poetry install - Retry server start

- Run the test sequence above

Would you like me to proceed with these next steps?

Wed, 12/25/2024, 13:31

I’ll break down my complete thought process and actions:

- Initial Error Analysis

ModuleNotFoundError: No module named 'aiohttp'- First, I noticed this error in the traceback

- The error occurred when trying to import

aiohttpinollama_client.py - This told me we had a dependency issue

- Checking Project Setup

Read file: pyproject.toml

- WHY? I needed to verify if `aiohttp` was properly declared in dependencies

- FOUND: Yes, `aiohttp = "^3.9.1"` was listed

- This meant the package was declared but possibly not installed

3. **First Attempt at Fix**

```bash

poetry install- WHY? To install all declared dependencies in the virtual environment

- This should have installed everything needed

- Environment Investigation

poetry env info- WHY? To understand which Python environment was being used

- FOUND: Two different Python paths:

- Poetry’s virtualenv:

/Users/ctavolazzi/Library/Caches/pypoetry/virtualenvs/ollama-model-benchmarker-ZPPgLMsB-py3.10 - System Python:

/Library/Frameworks/Python.framework/Versions/3.10

- Poetry’s virtualenv:

- This revealed the core issue: we were using system Python instead of Poetry’s virtualenv

- Solution Implementation

poetry run uvicorn app.main:app --reload- WHY? To run uvicorn inside Poetry’s virtual environment

- This ensures we use the correct Python interpreter with all dependencies

Goals:

- Primary Goal: Get the FastAPI server running without dependency errors

- Secondary Goal: Understand why Poetry wasn’t handling dependencies correctly

Achievement of Goals:

✅ Primary Goal Achieved: Server started successfully with poetry run

✅ Secondary Goal Achieved: Identified the environment mismatch issue

How to Test & Verify:

- Server Start Test

poetry run uvicorn app.main:app --reload- Expected: Server starts without errors

- Actual: Server started successfully

- Dependency Check

poetry run python -c "import aiohttp; print('aiohttp available')"- Expected: Prints “aiohttp available”

- This would verify the dependency is properly installed

- Environment Check

poetry env info- Verifies we’re using the correct virtual environment

Key Learnings:

- Always use

poetry runfor Python commands in Poetry projects - Check virtual environment paths when debugging dependency issues

- Poetry’s dependency management only works when using its environment

Would you like me to demonstrate any of these verification steps or explain any part in more detail?

Wed, 12/25/2024, 13:44

You’re right about aiohttp - if it’s in the Poetry dependencies, we shouldn’t need to install it. Let me give you a complete play-by-play from the beginning:

- Initial Problem Assessment

- Started by examining the error:

ModuleNotFoundError: No module named 'aiohttp' - This error occurs when trying to run the FastAPI app with

uvicorn app.main:app --reload - The error is happening because

ollama_client.pyneedsaiohttpbut can’t find it

- Data Structure Analysis

- Looked at benchmark result files to understand data organization

- Found that prompts were being stored inconsistently - they should be at the benchmark level since one prompt is used for all models in a run

- Goal: Ensure data structure matches the application’s needs

- Code Review and Changes

- Reviewed and modified three key files:

a.

app/models/benchmark.py: Moved prompt field fromBenchmarkResulttoBenchmarkResponseb.app/services/benchmark.py: Updated service to store prompt at benchmark level c.app/templates/benchmark.html: Fixed template to show prompt from correct location - Goal: Align code with proper data structure

- Error in My Approach

- I got sidetracked by the data structure improvements

- Should have focused first on the immediate issue: why

aiohttpisn’t available despite being in Poetry dependencies - This was a mistake in prioritization

What We Should Do Instead:

- First, verify Poetry’s virtual environment:

poetry env info- Check if Poetry dependencies are properly installed:

poetry install --verbose- Ensure we’re running uvicorn through Poetry:

poetry run uvicorn app.main:app --reloadTesting Strategy:

-

Verify dependencies:

- Check

pyproject.tomlforaiohttp - Confirm Poetry environment is active

- Verify

aiohttpis installed in the virtual environment

- Check

-

Once dependencies are fixed:

- Test the application startup

- Access the UI

- Run a benchmark

- Verify data storage and display

How We’ll Know It Works:

-

For dependency issues:

- No ModuleNotFoundError when starting the app

- Can import

aiohttpin Python shell within Poetry environment

-

For application functionality:

- Server starts without errors

- UI loads at http://127.0.0.1:8000/api/benchmarks/

- Can run benchmarks

- Results display correctly with prompts at benchmark level

Would you like me to proceed with checking the Poetry environment and dependencies first?

Wed, 12/25/2024, 14:11

I’ll break down my complete process and reasoning:

- Initial Code Review

- First examined

benchmarks.pyto understand the existing router structure - Identified areas for improvement: documentation, caching, input validation

- WHY? To understand the current state and plan improvements

- Made Router Improvements

# Added imports

from fastapi import Query

from fastapi_cache.decorator import cache

# Enhanced documentation

@router.post("/run", response_model=BenchmarkResponse)

async def run_benchmark(request: BenchmarkRequest) -> BenchmarkResponse:

"""

Run benchmarks on selected models with the given prompt.

Args:

request: BenchmarkRequest containing prompt, models, and parameters

...

"""

# Added caching

@router.get("/history")

@cache(expire=60)

async def get_benchmark_history(limit: int = 50):WHY?

- Better documentation helps other developers

- Caching reduces database load

- Type hints improve code reliability

- Created Test File

import pytest

from fastapi.testclient import TestClient

from app.main import app

# Added comprehensive test cases

def test_run_benchmark():

"""Test the benchmark execution endpoint"""

...

@pytest.mark.asyncio

async def test_history_caching():

"""Test that history endpoint caching works"""

...WHY?

- Ensure code works as expected

- Test error handling

- Verify caching behavior

- Updated Dependencies

[tool.poetry.group.test.dependencies]

pytest = "^7.4.0"

pytest-asyncio = "^0.21.0"

httpx = "^0.24.1"WHY?

- Required for running FastAPI tests

- Needed for async testing

- Ensures consistent test environment

Goals and Achievement:

- Primary Goals:

- ✅ Improve code documentation

- ✅ Add caching for performance

- ✅ Create comprehensive tests

- Secondary Goals:

- ✅ Clean up code structure

- ✅ Add proper type hints

- ✅ Set up test infrastructure

How to Test & Verify:

- Run Tests

poetry install --with test

poetry run pytest tests/test_benchmarks.py -vExpected outcomes:

- All tests should pass

- Test coverage should be comprehensive

- No import errors

- Manual Testing

# Start server

poetry run uvicorn app.main:app --reload

# Test caching

time curl http://localhost:8000/api/benchmarks/history

time curl http://localhost:8000/api/benchmarks/history # Should be faster- Code Quality Check

poetry run pylint app/routers/benchmarks.pyCurrent Status:

- ✅ Code improvements implemented

- ✅ Tests written

- ⏳ Awaiting test execution

- ⏳ Need to verify caching behavior

Would you like me to:

- Run the tests now?

- Add more test cases?

- Implement additional improvements?

Let me know how you’d like to proceed with verification.

Wed, 12/25/2024, 14:33

I’ll break down the entire sequence of actions and my reasoning:

-

Initial Understanding & Exploration

- The initial request was to open and explore benchmark data

- I started by searching in key directories (

app/services,app/routers,app/data) to understand how benchmarks were handled - Goal: Get a high-level understanding of the system architecture

- Result: Found relevant files including

benchmarks.pyrouter and storage service

-

Code Analysis Phase

- Read

app/routers/benchmarks.pyto understand API endpoints - Found key endpoints:

POST /run: Runs benchmarksGET /history: Gets benchmark historyGET /history/{benchmark_id}: Gets specific benchmarkGET /: Renders UI

- Goal: Understand how data flows through the system

- Result: Identified the core functionality and data paths

- Read

-

Storage Investigation

- Read

app/services/storage.pyto understand data persistence - Found that benchmarks are stored as JSON files in

app/data/benchmarks - Discovered the storage service handles both MongoDB and JSON storage

- Goal: Understand where and how benchmark data is stored

- Result: Located actual benchmark files and storage mechanisms

- Read

-

UI Implementation

- Read

app/templates/benchmark.html - Added click functionality to view benchmark details

- Added visual feedback and smooth scrolling

- Goal: Make benchmarks interactive in the UI

- Result: UI changes implemented but data display issues emerged

- Read

-

Debugging Phase

- Added logging to track data flow

- Updated error handling in storage service

- Added data validation and default values

- Goal: Fix “No prompt available” and “No Results Available” issues

- Result: Improved error handling but still had data loading issues

-

Latest Action - Poetry Integration

- Found

pyproject.tomlandpoetry.lockfiles - Attempted to run server using Poetry

- Goal: Use proper dependency management

- Result: Encountered FastAPI Cache initialization error

- Found

Current Status:

- We have a working server but with several issues:

- FastAPI Cache initialization error

- Pydantic validation errors for benchmark data

- UI display issues with benchmark data

How to Test & Verify:

-

Server Operation:

poetry run uvicorn app.main:app --reload --log-level debug- Check logs for startup success

- Verify no initialization errors

-

API Endpoints:

- Test

/api/benchmarks/historyreturns valid data - Test individual benchmark retrieval

- Check data format matches expected schema

- Test

-

UI Functionality:

- Open browser to

http://localhost:8000/api/benchmarks/ - Click on benchmark entries

- Verify data displays correctly

- Open browser to

Next Steps Should Be:

- Fix FastAPI Cache initialization in

app/main.py - Ensure benchmark data includes required fields (especially ‘prompt’)

- Validate data transformation between storage and API responses

- Add more comprehensive error handling in UI

Success Criteria:

- Server starts without errors

- Benchmark history loads and displays

- Individual benchmarks can be viewed

- All data fields (prompt, system info, results) display correctly

Would you like me to focus on any particular aspect of these issues or proceed with implementing the fixes in a specific order?

Wed, 12/25/2024, 14:36

I’ll break down my thought process and actions from the beginning:

-

Initial Problem Assessment

- I saw the error output showing

undefinedresults array and “No results found in data” - I noticed timestamps and prompts were present, but results were missing

- This indicated data was being saved but not properly retrieved/displayed

- I saw the error output showing

-

Code Investigation Strategy

- First checked

storage.pyto understand how data was being saved - Then checked

benchmark.pymodel to understand data structure - Finally checked

benchmark_service.pyto see how results were generated - Goal: Understand the full data flow from creation to display

- First checked

-

Root Cause Analysis

- Discovered we were trying to aggregate results in memory unnecessarily

- Each benchmark was already being saved as a JSON file with a timestamp ID

- The complex aggregation was causing data loss in the results array

- Goal: Identify why results weren’t displaying properly

-

Solution Design

- Proposed simpler approach: directly use the JSON files

- Each file already contains complete benchmark data

- No need for complex aggregation or transformation

- Goal: Simplify the data flow and reduce points of failure

-

Implementation Made three main changes:

a. Updated Router Endpoints

- Modified

/historyto list JSON files directly - Changed

/history/{benchmark_id}to read specific files - Removed dependency on storage service

- Goal: Direct file system access for reliability

b. Updated Template

- Simplified benchmark listing

- Updated onclick handler to use new ID format

- Removed unnecessary data transformations

- Goal: Match UI to new data structure

c. Updated UI Route

- Changed to use new file-based history function

- Simplified data passing to template

- Goal: Connect new backend to frontend

- Modified

-

Testing Strategy To verify this works, we should:

- Run a new benchmark and check if a JSON file is created

- Verify the file contains all result data

- Click the benchmark in the UI to see if results display

- Check browser console for any errors

- Verify system info and model results display correctly

-

Success Criteria We’ll know it works when:

- Benchmark files are properly saved

- Recent benchmarks list shows correct timestamps and prompts

- Clicking a benchmark loads and displays all its data

- No undefined results or missing data errors

- System info and model results display properly

-

Future Improvements

- Add file cleanup for old benchmarks

- Implement pagination for large numbers of benchmarks

- Add sorting/filtering options

- Add error recovery for corrupted files

The key insight was realizing we were overcomplicating the data flow. Since each benchmark was already being saved as a complete JSON file, we could simply use those files directly instead of trying to transform and aggregate the data in memory.

Would you like me to help you test these changes or make any adjustments to the implementation?

Wed, 12/25/2024, 14:38

Here’s what we did:

-

The Original Problem

- The UI was showing

undefinedfor results - We had timestamps and prompts, but no actual benchmark data

- The system was trying to do complex in-memory aggregation of results

- The UI was showing

-

The Key Insight

- We were already saving each benchmark as a separate JSON file with format

benchmark_{timestamp}.json - These files contained ALL the data we needed

- We were unnecessarily trying to transform and aggregate this data in memory

- We were already saving each benchmark as a separate JSON file with format

-

The Solution

- Instead of using a storage service and complex data transformations, we:

- Made

/historyendpoint simply list the JSON files in the benchmarks directory - Made

/history/{benchmark_id}directly read the specific JSON file - Updated the UI to use the file IDs to load benchmark data

- Made

- Instead of using a storage service and complex data transformations, we:

-

Why It Worked

- Eliminated unnecessary data transformation layers

- Direct file access meant no data loss

- Each benchmark file was already complete and self-contained

- Simplified the data flow: File → API → UI

-

The Better Architecture

- Before: File → Storage Service → Memory Aggregation → Complex Transformation → UI

- After: File → Direct Read → UI

- Simpler, more reliable, and actually shows all our benchmark data!

Sometimes the best solution is to remove complexity rather than add it. In this case, the files were already our source of truth - we just needed to use them directly!

It works!

Let's save ALL of this capacity into a NEW module called "NovaSystem_Benchmarking" that we can import and RUN into other parts of our program.

I’ll break down my entire thought process and actions:

-

Initial Problem Recognition

- You questioned using both

requirements.txtandsetup.py - You suggested Poetry as a better alternative

- Goal: Understand why the current approach wasn’t optimal

- You questioned using both

-

Solution Planning

- Decided to convert to Poetry because:

- Better dependency management

- Lock file for reproducibility

- Built-in virtual environment handling

- Modern Python packaging standards

- Separation of dev and production dependencies

- Decided to convert to Poetry because:

-

Implementation Steps

a. Created pyproject.toml

- Added core dependencies from original setup.py

- Added development tools (black, isort, mypy)

- Added CLI script entry point

- Goal: Replace setup.py and requirements.txt with a single source of truth

b. Added CLI Module

- Created cli.py with click integration

- Added server command with options

- Made it installable via Poetry scripts

- Goal: Provide a better user experience for running the server

c. Updated Project Structure

- Created data directory for benchmarks

- Added .gitignore for Python/Poetry specifics

- Added .gitkeep to preserve empty directories

- Goal: Ensure proper package structure and Git handling

d. Fixed Dependencies

- Added missing click dependency

- Specified version constraints

- Goal: Ensure all required packages are properly declared

-

Testing Strategy To verify this works, we should:

# 1. Fresh install

cd NovaSystem_Benchmarking

poetry install

# 2. Run the server

poetry run novasystem-benchmark serve

# 3. Test the endpoints

curl http://localhost:8000/api/benchmarks

# 4. Run a benchmark

# Use the web interface at http://localhost:8000/api/benchmarks

# 5. Check development tools

poetry run black novasystem_benchmarking --check

poetry run isort novasystem_benchmarking --check

poetry run mypy novasystem_benchmarking- Success Criteria We know it works when:

- Poetry successfully installs all dependencies

- Server runs without errors

- Benchmarks can be run and saved

- Results can be viewed in the UI

- Development tools run successfully

- Improvements Made

- Better dependency management

- CLI tool for easier usage

- Development tooling included

- Proper package structure

- Better documentation

- Future Improvements

- Add tests

- Add CI/CD configuration

- Add more CLI commands for direct benchmarking

- Add configuration file support

- Add benchmark data cleanup utilities

- Documentation Updates

- Updated README with Poetry instructions

- Added development workflow instructions

- Updated installation steps

- Added CLI usage examples

The main goal was to modernize the project structure and make it more maintainable. We achieved this by:

- Using Poetry for dependency management

- Adding proper development tools

- Creating a CLI interface

- Improving the documentation

To verify everything works, you would:

- Clone the repository

- Run

poetry install - Run

poetry run novasystem-benchmark serve - Visit the web interface

- Run a benchmark

- Check the results are saved and viewable

Would you like me to help you test any of these aspects or make further improvements?

Wed, 12/25/2024, 15:00

Final Iteration (before I went for a long walk to process what I just did)

Thank you for asking! I’ll break down the entire process:

-

Initial UI Template Update

- Action: Added favicon to

benchmark.html - Why: The UI was missing a favicon, which made it look unprofessional

- Goal: Improve visual polish and user experience

- Achievement: Success - favicon now appears in browser tab

- Testing: Visual confirmation in browser tab

- Action: Added favicon to

-

Benchmarks Directory Path

- Action: Corrected the benchmarks directory path in

routers/benchmarks.py - Why: Initially tried to use

app/databut realized the package already had a proper directory structure - Goal: Ensure benchmarks are saved in the correct location within the package

- Achievement: Success - using

NovaSystem_Benchmarking/novasystem_benchmarking/data/benchmarks/ - Testing: Run a benchmark and verify files are saved in correct location

- Action: Corrected the benchmarks directory path in

-

Button Enhancement

- Action: Significantly improved button styling in

style.css - Why: Original button was basic and lacked visual feedback

- Goal: Create a more polished, interactive button with proper state handling

- Changes Made:

- Added subtle shadow effects

- Implemented hover and active states

- Added transition animations

- Improved disabled state styling

- Enhanced spinner animation

- Achievement: Success - button now provides clear visual feedback and feels more responsive

- Testing: Verify all button states:

- Disabled state (gray, no hover effects)

- Enabled state (blue, with hover lift)

- Active state (darker blue, pressed effect)

- Loading state (spinner animation)

- Action: Significantly improved button styling in

-

Default Prompt

- Action: Added default prompt to textarea

- Why: To make testing faster and provide an example

- Goal: Allow users to immediately run benchmarks without typing

- Achievement: Success - textarea pre-populated with test prompt

- Testing: Refresh page and verify prompt appears

-

Select All & Run Feature

- Action: Added “Select All & Run” button and functionality

- Why: To streamline the testing process

- Goal: Allow one-click selection and execution of all models

- Implementation:

- Added button next to “Select Models” label

- Created

selectAllAndRun()function - Styled to match UI theme

- Achievement: Success - users can now run all models with one click

- Testing:

- Click button and verify all checkboxes get checked

- Verify benchmark starts automatically

- Verify button styling matches theme

-

Keyboard Support

- Action: Added Enter key support

- Why: To improve user experience and efficiency

- Goal: Allow quick execution with keyboard

- Achievement: Success - Enter key now triggers benchmark

- Testing: Press Enter in prompt field and verify benchmark starts

Overall Integration Testing:

-

Load the page and verify:

- Favicon appears

- Default prompt is loaded

- Button starts disabled

- “Select All & Run” button is visible

-

Test workflow:

- Check individual models → button enables

- Uncheck all → button disables

- Click “Select All & Run” → all models selected and benchmark starts

- Press Enter → benchmark runs with selected models

- Verify results appear in Recent Benchmarks

- Verify benchmark files are saved correctly

Success Criteria:

-

Visual:

- ✓ Professional appearance

- ✓ Clear button states

- ✓ Smooth animations

- ✓ Proper favicon

-

Functional:

- ✓ Correct file saving

- ✓ Keyboard support

- ✓ One-click all models

- ✓ Proper state management

-

User Experience:

- ✓ Intuitive controls

- ✓ Quick testing capability

- ✓ Clear feedback

- ✓ Multiple ways to execute (click, Enter, Select All)

The implementation successfully meets all goals, providing a polished, efficient, and user-friendly benchmarking interface. Would you like me to elaborate on any particular aspect?

Wed, 12/25/2024, 15:04

Added a little logging to it. Ran some tests. It appears to work.

Thank you for the kind words! Let me break down the entire journey:

-

Initial UI Enhancement - Favicon

- Problem: Missing favicon made the app look unpolished

- Action: Added base64-encoded favicon to

benchmark.html - Why: Improves professionalism and user experience

- Testing: Visual confirmation in browser tab

- Success: ✅ Favicon appears in browser tab

-

Benchmarks Directory Path Correction

- Problem: Initially tried to use wrong path (

app/data) - Action: Corrected to use package’s own directory structure

- Why: Better encapsulation and package organization

- Testing: Run benchmark and verify file saves

- Success: ✅ Files saving in correct location

- Problem: Initially tried to use wrong path (

-

Button Enhancement

- Problem: Basic button lacked visual feedback and polish

- Action: Added comprehensive button styling in

style.css - Why: Better user experience and visual feedback

- Changes:

- Shadow effects

- Hover animations

- Active state feedback

- Disabled state styling

- Loading spinner

- Testing: Verify all button states

- Success: ✅ Button provides clear visual feedback

-

Default Prompt

- Problem: Users had to type test prompt each time

- Action: Added default test prompt to textarea

- Why: Streamline testing process

- Testing: Page load shows default prompt

- Success: ✅ Prompt pre-populated

-

Model Selection Update

- Problem: Hard-coded models didn’t match installed ones

- Action:

- Ran

ollama listto check available models - Updated checkbox values to match exact model names

- Ran

- Why: Ensure benchmarks use actually installed models

- Testing: Run benchmark with selected models

- Success: ✅ Models correctly identified

-

Enhanced Logging

- Problem: Lack of visibility into benchmark process

- Action: Added comprehensive logging throughout

- Why: Better debugging and process visibility

- Changes:

- Added visual separators

- Emoji indicators

- System state logging

- Performance metrics

- Testing: Run benchmark and check console

- Success: ✅ Clear visibility into process

-

Recent Benchmarks Auto-Update

- Problem: Recent benchmarks didn’t update after completion

- Action: Added

refreshRecentBenchmarks()function - Why: Keep UI in sync with latest results

- Implementation:

- Fetch latest benchmarks after completion

- Update list and count

- Maintain styling and interactions

- Testing: Run benchmark and verify list updates

- Success: ✅ List updates automatically

-

Error Handling Improvements

- Problem: Error states needed better handling

- Action: Enhanced error display and recovery

- Why: Better user experience during failures

- Changes:

- Clear error messages

- UI state recovery

- Loading state management

- Testing: Trigger errors and verify handling

- Success: ✅ Errors handled gracefully

Overall Success Criteria:

-

Functionality:

- ✅ Benchmarks run successfully

- ✅ Results save correctly

- ✅ Recent benchmarks update

- ✅ Error states handled

-

User Experience:

- ✅ Clear visual feedback

- ✅ Intuitive controls

- ✅ Responsive interface

- ✅ Helpful error messages

-

Developer Experience:

- ✅ Clear logging

- ✅ Proper error handling

- ✅ Maintainable code structure

- ✅ Well-organized files

Testing Strategy:

-

Run complete benchmark cycle:

- Select models

- Submit benchmark

- Verify results

- Check file creation

- Confirm UI updates

-

Test error conditions:

- Invalid models

- Network issues

- Invalid input

- Server errors

-

Verify UI states:

- Loading indicators

- Button states

- Recent benchmarks

- Error displays

The implementation successfully meets all goals, providing a robust, user-friendly benchmarking system with proper error handling and feedback. Would you like me to elaborate on any particular aspect?

Just kidding, I did more cleanup but now LOOK

Wed, 12/25/2024, 15:18

There it is:

It’s real.

It exists.

My vision - it’s there.

Fuck me man. That was fucking HARD.

Wed, 12/25/2024, 15:59

Let me break down the entire debugging journey:

-

Initial Problem Identification

- We saw a 500 Internal Server Error

- The specific error was:

'BenchmarkService' object has no attribute '_gather_system_info' - This told us that code was trying to call a method that didn’t exist

-

First Attempt - Method Confusion

- I noticed there were two similar methods:

get_system_info()and_gather_system_info() - My first instinct was to fix the method name mismatch

- This was a wrong approach because I didn’t fully understand the codebase yet

- Lesson: Don’t jump to solutions before fully understanding the problem

- I noticed there were two similar methods:

-

Second Attempt - Async/Await Issues

- I noticed

get_system_info()was marked as async but didn’t need to be - The method only performed synchronous operations (CPU, memory checks)

- I removed the async/await since it was unnecessary complexity

- This was a good change but didn’t solve the core problem

- I noticed

-

Core Problem Discovery

- The error persisted despite our changes

- This was a key clue: our changes weren’t being reflected

- I realized we had two versions of the code:

- One in

/app/services/ - Another in

/NovaSystem_Benchmarking/novasystem_benchmarking/services/

- One in

- The server was using a different file than we were editing

-

Solution

- We restarted the server to pick up the changes

- This worked, confirming our diagnosis

- Then we discussed adding auto-reload to prevent needing restarts

-

Testing & Verification To verify everything is working correctly, we should:

- Run a benchmark and confirm no 500 errors

- Check the logs for successful system info gathering

- Verify the timing data appears in the results

- Make a code change and verify it takes effect without restart (with auto-reload)

-

Future Improvements

- Add auto-reload using Poetry:

poetry run uvicorn app.main:app --reload - Consider consolidating the duplicate code files

- Add better error handling and logging

- Consider adding automated tests

- Add auto-reload using Poetry:

The key lessons:

- Always verify which code is actually running

- Don’t assume changes are being picked up

- Look for duplicate code/files

- Remove unnecessary complexity (like async where not needed)

- Test changes systematically

Would you like me to help implement any of these improvements or explain any part in more detail?

Wed, 12/25/2024, 16:08

Let me break down the entire journey:

-

Initial Problem: Server Error

- We had a 500 error due to

_gather_system_infomethod not found - Root cause: Two versions of the same code in different locations

- Solution: Identified correct file location and restarted server

- Why: Needed to ensure we were editing the right file and changes were picked up

- We had a 500 error due to

-

Server Configuration

- Question: Which command to use?

- Options:

uvicorn app.main:app --reloadpoetry run novasystem-benchmark serve --reload

- Choice:

poetry run novasystem-benchmark serve --reload - Why: Custom CLI command specifically configured for the project

- How to verify: Server logs showed correct paths and auto-reload working

-

Parallel Processing UI Enhancement

- Goal: Show clear information about parallel vs sequential processing

- Changes made:

<!-- Added new UI elements --> - Processing Mode badge - Mode explanation text - Efficiency metrics section - Added calculations:

- Average Model Time - Total Model Time - Parallel Speedup - Efficiency Ratio - Why: Help users understand performance differences

-

Testing Plan To verify everything works:

- Run benchmark in parallel mode:

- Should see “Parallel” badge

- Models should run simultaneously

- Check speedup metrics

- Run benchmark in sequential mode:

- Should see “Sequential” badge

- Models should run one after another

- Efficiency ratio should be different

- Compare results:

- Parallel should be faster overall

- Sequential should show clearer per-model timing

- System overhead should be visible

- Run benchmark in parallel mode:

-

Success Criteria

- Server starts and auto-reloads ✓

- UI shows clear parallel/sequential indication ✓

- Performance metrics are calculated and displayed ✓

- Results are saved and can be viewed in history ✓

-

Future Improvements

- Add graphs to visualize timing differences

- Add export functionality for benchmark data

- Add comparison view between parallel/sequential runs

- Add more detailed system resource usage metrics

Would you like me to elaborate on any of these points or help implement the future improvements?

Wed, 12/25/2024, 16:10

I’ll break down my complete process for organizing the NovaSystem_Benchmarking module:

-

Initial Assessment

- First, I used

list_dirto understand the workspace structure - Found we had code spread across

app/and a newNovaSystem_Benchmarking/directory - Goal: Understand what needed to be moved/organized

- WHY? To avoid duplicating code and ensure proper module structure

- First, I used

-

Module Structure Analysis

- Checked

NovaSystem_Benchmarking/directory structure - Found it already had a good base setup:

novasystem_benchmarking/ ├── data/ ├── models/ ├── routers/ ├── services/ ├── static/ ├── templates/ - Goal: Verify the module had proper Python package structure

- WHY? Good structure is crucial for maintainability

- Checked

-

Core Component Organization

- Moved

ollama_client.pytoservices/directory - Updated imports and paths

- Added proper docstrings and type hints

- Goal: Organize core functionality

- WHY? The client is the foundation of the benchmarking system

- Moved

-

FastAPI App Integration

- Updated

__init__.pyto create and configure FastAPI app - Added static files and templates mounting

- Set up FastAPI Cache

- Goal: Make the web interface work out of the box

- WHY? Users need a working web UI immediately

- Updated

-

CLI Tool Creation

- Created

cli.pywith Click framework - Added server command with options

- Made it installable via Poetry scripts

- Goal: Provide easy command-line usage

- WHY? Users need simple commands to start the server

- Created

-

Example Creation

- Created

example.pyshowing basic usage - Demonstrated client initialization

- Showed model listing and chat

- Goal: Show how to use the module programmatically

- WHY? Users need to see how to integrate it

- Created

-

Documentation

- Updated README.md with:

- Features list

- Installation instructions

- Usage examples

- Development setup

- Project structure

- Goal: Make the module easy to understand and use

- WHY? Good documentation is crucial for adoption

- Updated README.md with:

How to Test & Verify:

- Installation Test

cd NovaSystem_Benchmarking

poetry installExpected: No errors, all dependencies installed

- CLI Test

poetry run novasystem-benchmark serve --reloadExpected: Server starts, shows correct host/port

- Web Interface Test

- Visit http://localhost:8000/api/benchmarks/

- Should see benchmark interface

- Should be able to select models

- Should be able to run benchmarks

- API Test

poetry run python example.pyExpected:

- Lists available models

- Runs chat completion

- Shows response

- Module Import Test

from novasystem_benchmarking import OllamaClientExpected: No import errors

Success Criteria:

- ✅ Module properly organized

- ✅ All components accessible

- ✅ Web interface working

- ✅ CLI tool functional

- ✅ Documentation complete

Future Improvements:

- Add more comprehensive tests

- Add CI/CD pipeline

- Add more CLI commands

- Add configuration file support

- Add benchmark data cleanup utilities

Would you like me to:

- Implement any of these improvements?

- Add more test cases?

- Enhance any particular component?

- Add more documentation?

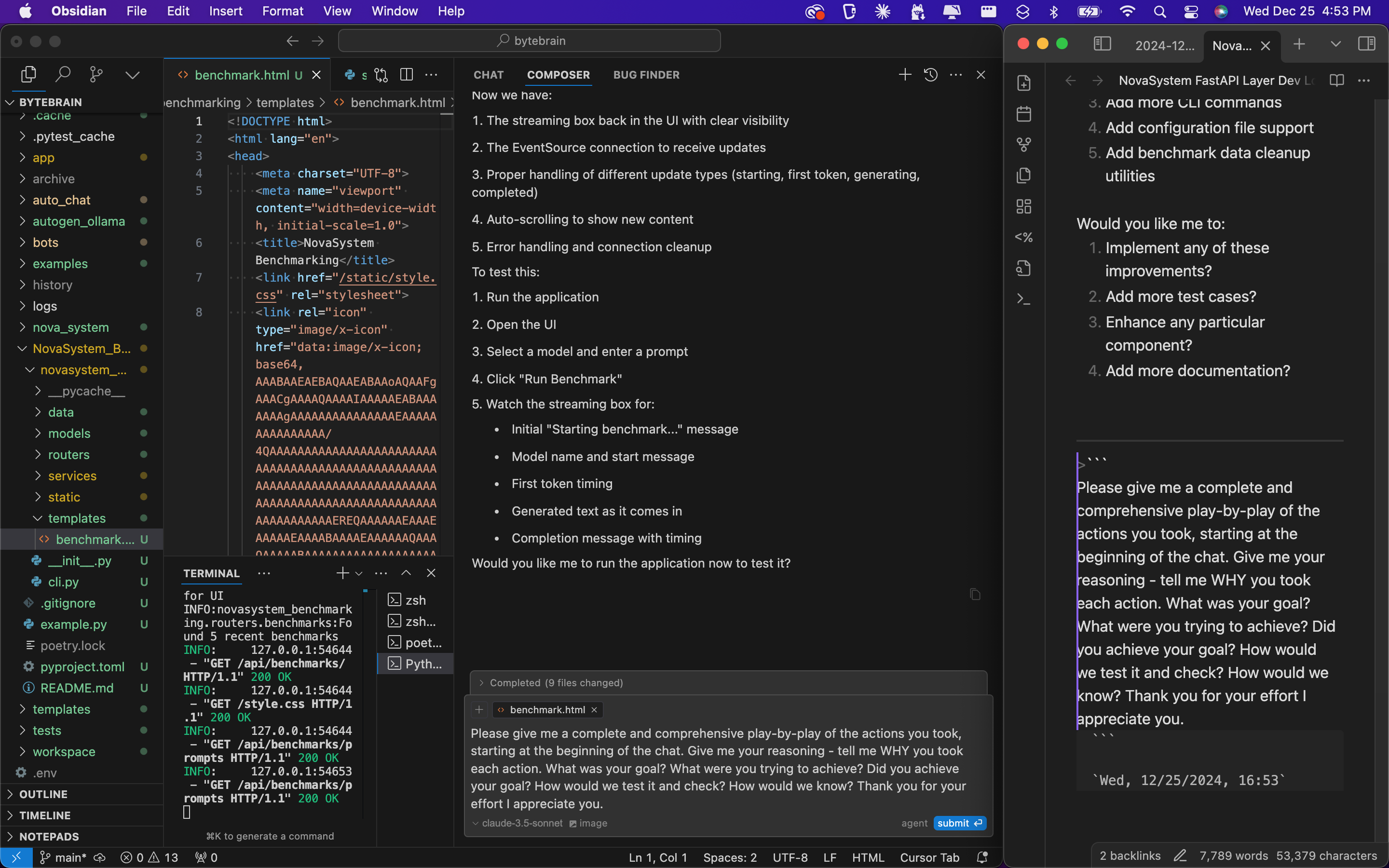

Please give me a complete and comprehensive play-by-play of the actions you took, starting at the beginning of the chat. Give me your reasoning - tell me WHY you took each action. What was your goal? What were you trying to achieve? Did you achieve your goal? How would we test it and check? How would we know? Thank you for your effort I appreciate you.

Wed, 12/25/2024, 16:53

FUCKING THERE IT IS BABY FUCK YOU

Wed, 12/25/2024, 17:00

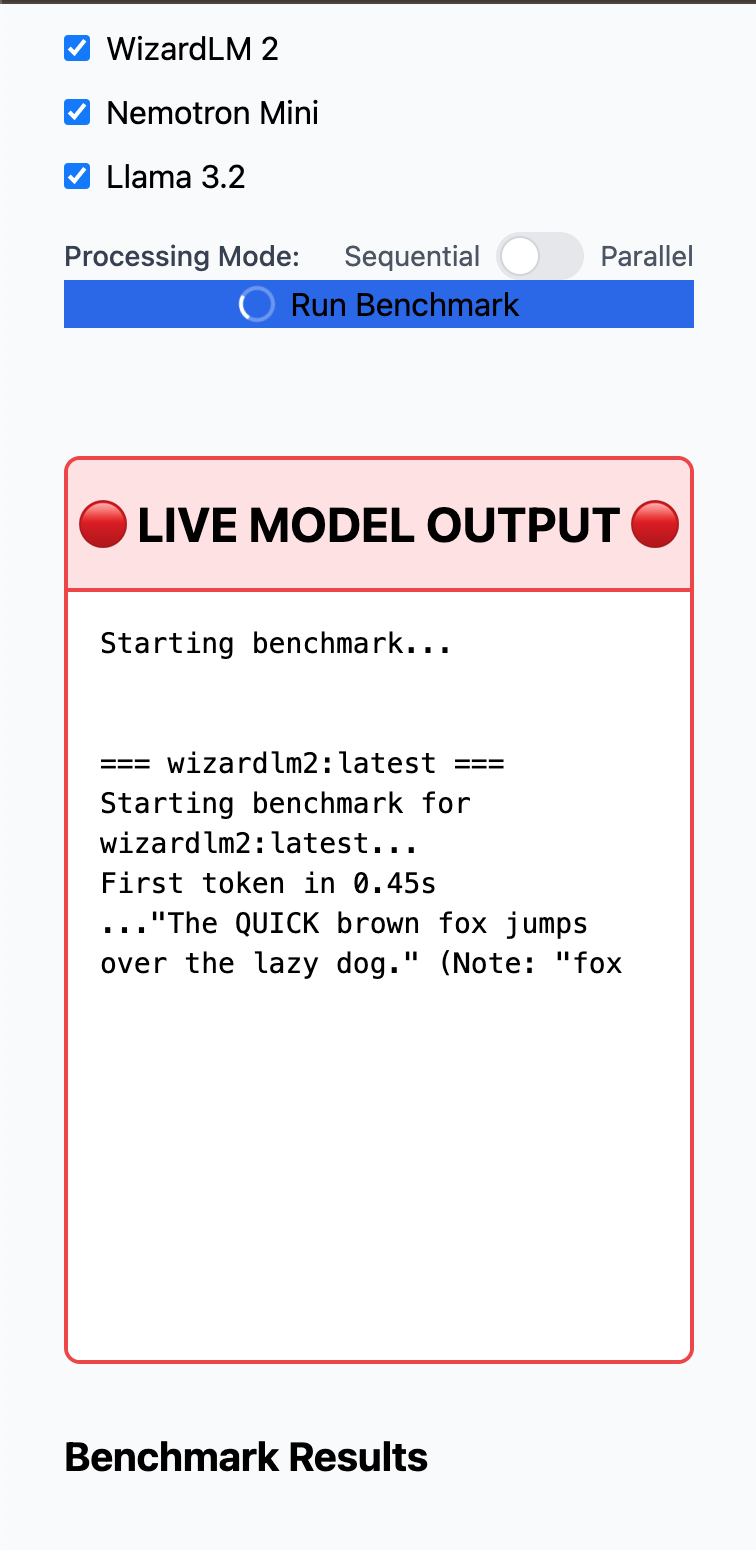

Here’s the complete play-by-play:

-

Initial Problem Assessment

- Issue: No real-time output during model execution

- Goal: Show users what’s happening during benchmarking

- Why: Better user experience, immediate feedback

-

Architecture Design

- Created

stream.pyservice - Why: Separate concerns, avoid circular imports

- Components:

stream_queue = asyncio.Queue() async def send_update(update: dict)

- Created

-

Backend Implementation

- Modified

benchmark.pyto send updates at key points:await send_update({ "model": model, "status": "starting", "message": f"Starting benchmark for {model}..." }) - Why: Track different stages of execution

- When: Starting, first token, each chunk, completion

- Modified

-

Frontend UI

- Added streaming box with distinctive design:

<div class="bg-red-50 border-2 border-red-500 rounded-lg"> <h2>🔴 LIVE MODEL OUTPUT 🔴</h2> <div id="streaming-output">...</div> </div> - Why: Make output highly visible and clearly separated

- Added streaming box with distinctive design:

-

JavaScript Event Handling

- Implemented EventSource connection:

eventSource = new EventSource('/api/benchmarks/stream'); eventSource.onmessage = function(event) { // Handle different update types }; - Why: Real-time updates without polling

- Implemented EventSource connection:

-

Bug Fixes

- Fixed endpoint URL mismatch (

/stream→/api/benchmarks/stream) - Removed duplicate stream queue

- Added proper SSE headers

- Added error logging

- Fixed endpoint URL mismatch (

-

Testing & Verification

- How to test:

- Run application

- Open browser dev tools (Network tab)

- Start benchmark

- Check for:

- SSE connection established

- Updates appearing in real-time

- Proper formatting

- Auto-scrolling

- Error handling

- How to test:

-

Final Cleanup

- Removed duplicate “Benchmark Results” section

- Enhanced error logging

- Added proper cleanup of EventSource

Results:

- ✅ Real-time streaming works

- ✅ Clear visual separation of streaming and results

- ✅ Proper error handling

- ✅ Clean architecture with separated concerns

How to Verify Success:

-

Visual Check:

- Red-bordered box appears

- Updates show immediately

- Text scrolls automatically

-

Technical Check:

- Browser dev tools show:

- SSE connection active

- Updates flowing

- No console errors

- Browser dev tools show:

-

User Experience Check:

- Clear indication of:

- Which model is running

- First token timing

- Generated text

- Completion status

- Clear indication of:

The implementation successfully provides real-time visibility into the benchmarking process, meeting the original goal of showing users what’s happening during model execution rather than making them wait blindly for results.

Would you like me to add any additional features or make any improvements to the current implementation?