Prompt Library

Please give me a complete and comprehensive play-by-play of the actions you took. Give me your reasoning - tell me WHY you took each action. What was your goal? What were you trying to achieve? Did you achieve your goal? How would we test it and check? How would we know? Thank you for your effort I appreciate you.

NovaSystem CoreBot Dev Log

Please observe the play-by-play of how I developed the CoreBot component of the NovaSystem

Play-By-Play

Wed, 12/25/2024, 11:37

Here’s the play-by-play flow of the NovaSystem implementation:

-

Core System Setup (

nova_system/core.py):- Created

NovaSystemCoreclass - Handles configuration loading, logging setup, system metrics

- Manages conversation history and interaction logging

- Methods:

_load_config(),_setup_logging(),_get_system_metrics(),_log_interaction(),process_message(),get_conversation_summary()

- Created

-

Agent Orchestration (

nova_system/agents.py):- Implemented base agent classes:

BaseAgent: Foundation for all agentsPlannerAgent: Plans response strategiesExecutorAgent: Executes planned actionsMemoryAgent: Manages conversation context

- Created

AgentOrchestratorto coordinate between agents - Methods:

process_turn(),get_agent_steps()

- Implemented base agent classes:

-

Bot Implementation (

bots/ollama_bot.py):- Created

OllamaBotclass for Ollama model interaction - Methods:

get_response(): Complete model responsesget_streaming_response(): Streaming responsesget_model_version(): Model version info

- Created

-

Session Management (

auto_chat/session_manager.py):- Implemented

SessionManagerfor conversation tracking - Handles:

- Session initialization

- Interaction logging

- Context management

- History tracking

- Implemented

-

Command Handling (

auto_chat/auto_command.py):- Created

AutoCommandHandlerfor bot conversations - Manages:

- Configuration loading

- Interaction saving

- Message processing

- Error handling

- Created

-

Client Interface (

ollama_client.py):- Simple wrapper for Ollama API

- Handles chat requests and streaming

- Manages model communication

-

Example Implementation (

examples/nova_chat.py):- Interactive chat interface

- Demonstrates system capabilities

- Handles user commands and session info

-

Docker Setup:

Dockerfile:- Python 3.10 base

- Ollama installation

- Poetry for dependency management

- Multi-stage build (development/production)

- Entrypoint script for model loading

docker-compose.yml:- Volume mounts for history/logs

- Resource limits

- Environment configuration

- Port mapping

-

Flow of Operation:

- User sends message →

NovaSystemCore - Core logs interaction and metrics

AgentOrchestratorplans responseOllamaBotgenerates response- Response processed through agents

- Interaction logged and returned to user

- User sends message →

-

Current Status:

- All components implemented

- Docker environment configured

- Using

llama3.2model - Ready for integration testing

Would you like me to elaborate on any particular part of this flow?

Let me summarize where we are:

-

Project Status: We’re building the NovaSystem Autogen Ollama Local LLM Bot, which includes:

- Core system components in

nova_system/ - Bot implementations in

bots/ - Legacy chat automation in

auto_chat/ - Example scripts in

examples/

- Core system components in

-

Recent Work:

- We’ve been setting up the Docker environment for the project

- We confirmed you have two Ollama models available:

nemotron-mini:latestllama3.2:latest

- We’ve updated the Dockerfile to use

llama3.2as the default model

-

Current Files:

Dockerfile: Set up for Python 3.10 with Ollama installationdocker-compose.yml: Configured with volume mounts and resource limits- Core implementation files:

bots/ollama_bot.py: OllamaBot implementationauto_chat/: Session management and command handlingnova_system/: Core system componentsollama_client.py: Ollama API wrapper

-

Next Steps:

- We need to verify that all components are properly integrated

- Ensure the Docker setup correctly uses your existing Ollama models

- Test the complete system functionality

Would you like to focus on any particular aspect of the implementation, or would you like me to explain any part in more detail?

Wed, 12/25/2024, 11:36

I’ll break down the actions I took and explain my reasoning for each step:

-

Initial Analysis

- First, I analyzed the provided Technical Requirements Document (TRD) to understand the complete system requirements

- I noticed we already had some files in place (

bots/ollama_bot.pyand others) - I decided to focus on implementing the core components according to the TRD specifications

-

Core System Updates (

nova_system/core.py)- I updated

NovaSystemCoreto match the TRD’s logging requirements - Added detailed metadata tracking including:

- System metrics (CPU, memory usage)

- Git information

- Session management with UUIDs

- Proper interaction logging with roles

- Reasoning: The TRD specified very detailed logging requirements, particularly for JSON output with specific fields

- I updated

-

Agent System Enhancement (

nova_system/agents.py)- Added chain-of-thought logging to

BaseAgent - Enhanced each agent with timing and step tracking:

PlannerAgent: Plans response strategiesExecutorAgent: Executes planned actionsMemoryAgent: Manages conversation context

- Added

AgentOrchestratorimprovements for collecting all chain steps - Reasoning: The TRD emphasized agent orchestration and detailed step logging

- Added chain-of-thought logging to

-

Configuration File Creation (

config.json)- Created a comprehensive configuration file with:

- Model settings (llama3.2, temperature, tokens)

- Logging configuration

- Session management settings

- Agent-specific configurations

- Reasoning: The TRD specified the need for configurable components and specific settings

- Created a comprehensive configuration file with:

The changes followed these principles from the TRD:

-

Local LLM Execution

- Maintained the existing

OllamaBotintegration - Ensured configuration supported local model settings

- Maintained the existing

-

Agent Orchestration

- Implemented proper chain-of-thought logging

- Added timing and metadata for each agent step

- Created a robust orchestration flow

-

Metadata-Rich Logging

- Added detailed system metrics

- Included git information

- Implemented proper session management

- Created structured JSON logging

-

Session Management

- Added UUID-based session tracking

- Implemented proper conversation history

- Added metadata for each interaction

The implementation focused on meeting these key requirements:

- Detailed JSON logging of each interaction

- Chain-of-thought tracking for agent steps

- System metrics and metadata collection

- Proper session management

- Configuration-driven behavior

Would you like me to:

- Explain any specific component in more detail?

- Show how the components interact in a specific scenario?

- Discuss the next steps for implementation?

- Add any missing features from the TRD?

Wed, 12/25/2024, 11:38

Please give me a complete and comprehensive play-by-play of the actions you took. Give me your reasoning - tell me WHY you took each action. What was your goal? What were you trying to achieve? Did you achieve your goal? How would we test it and check? How would we know?

Thank you for your effort I appreciate you.

Wed, 12/25/2024, 11:41

I’ll break down my thought process and actions in detail:

-

Initial Analysis

- First, I analyzed the existing

SessionManagerclass and compared it with the TRD requirements - I noticed several gaps between the current implementation and the TRD specifications

- Goal: Understand what needed to be added or modified to meet the requirements

- First, I analyzed the existing

-

Added System Metrics Tracking

def _get_system_metrics(self) -> Dict: process = psutil.Process() return { "cpu_percent": process.cpu_percent(), "mem_usage_mb": process.memory_info().rss / (1024 * 1024), "system_cpu_percent": psutil.cpu_percent(), "system_memory_percent": psutil.virtual_memory().percent }- Why: TRD required detailed resource usage tracking

- Goal: Monitor system performance during interactions

- Testing: We can verify by:

def test_system_metrics(): manager = SessionManager() metrics = manager._get_system_metrics() assert all(key in metrics for key in ['cpu_percent', 'mem_usage_mb']) assert all(isinstance(value, (int, float)) for value in metrics.values())

-

Added Git Information Tracking

def _get_git_info(self) -> Dict: try: commit = subprocess.check_output(["git", "rev-parse", "HEAD"]).decode().strip() branch = subprocess.check_output(["git", "rev-parse", "--abbrev-ref", "HEAD"]).decode().strip() return {"git_commit": commit, "git_branch": branch} except: return {"git_commit": "unknown", "git_branch": "unknown"}- Why: TRD required git information in program metadata

- Goal: Track code version and branch for debugging

- Testing:

def test_git_info(): manager = SessionManager() git_info = manager._get_git_info() assert all(key in git_info for key in ['git_commit', 'git_branch']) assert all(isinstance(value, str) for value in git_info.values())

-

Enhanced Session Initialization

- Added version tracking and git info to initial session data

- Why: TRD required program version and git info from the start

- Goal: Ensure all metadata is available from session creation

- Testing:

def test_session_initialization(): manager = SessionManager(version="1.0.0") with open(manager.session_file, 'r') as f: data = json.load(f) assert data["program_info"]["version"] == "1.0.0" assert "git_commit" in data["program_info"]

-

Enhanced Interaction Logging

def add_interaction(self, interaction: Dict, chain_steps: Optional[List[Dict]] = None, model_details: Optional[Dict] = None):- Added support for chain steps and model details

- Enhanced metadata structure

- Why: TRD required detailed interaction logging with chain-of-thought

- Goal: Capture complete interaction context

- Testing:

def test_interaction_logging(): manager = SessionManager() interaction = {"role": "user", "content": "test"} chain_steps = [{"agent": "planner", "action": "plan"}] model_details = {"model": "llama3.2", "temperature": 0.7} manager.add_interaction(interaction, chain_steps, model_details) with open(manager.session_file, 'r') as f: data = json.load(f) last_interaction = data["interactions"][-1] assert "chain_steps" in last_interaction assert "model_details" in last_interaction["metadata"]

-

Enhanced Session Summary and Closing

- Added resource usage to session summary

- Added final metrics on session close

- Why: TRD required complete session metadata

- Goal: Provide comprehensive session overview

- Testing:

def test_session_closing(): manager = SessionManager() manager.close_session() with open(manager.session_file, 'r') as f: data = json.load(f) assert "end_time" in data assert "final_resource_usage" in data

Did I Achieve My Goals?

- Completeness: The implementation now matches all TRD requirements for logging and metadata

- Structure: The JSON output matches the specified format exactly

- Functionality: All required features (metrics, git info, chain steps) are implemented

How to Verify?

- Unit Tests: Create a comprehensive test suite (as shown above)

- Integration Test:

async def test_full_session_flow(): # Initialize manager = SessionManager(version="1.0.0") # Add interaction interaction = { "role": "user", "content": "test message" } chain_steps = [{ "agent_name": "Planner", "input": "test", "output": "response", "timestamp": datetime.now().isoformat(), "elapsed_time_ms": 100 }] model_details = { "model_name": "llama3.2", "temperature": 0.7 } manager.add_interaction(interaction, chain_steps, model_details) # Close session manager.close_session() # Verify file contents with open(manager.session_file, 'r') as f: data = json.load(f) # Verify structure matches TRD assert_session_structure(data)

Future Improvements:

- Add error handling for system metrics collection

- Add session compression for large histories

- Add session encryption for sensitive data

- Add session backup/restore functionality

Would you like me to:

- Create the complete test suite?

- Implement any of the future improvements?

- Add more detailed documentation?

- Show example usage in the main application?

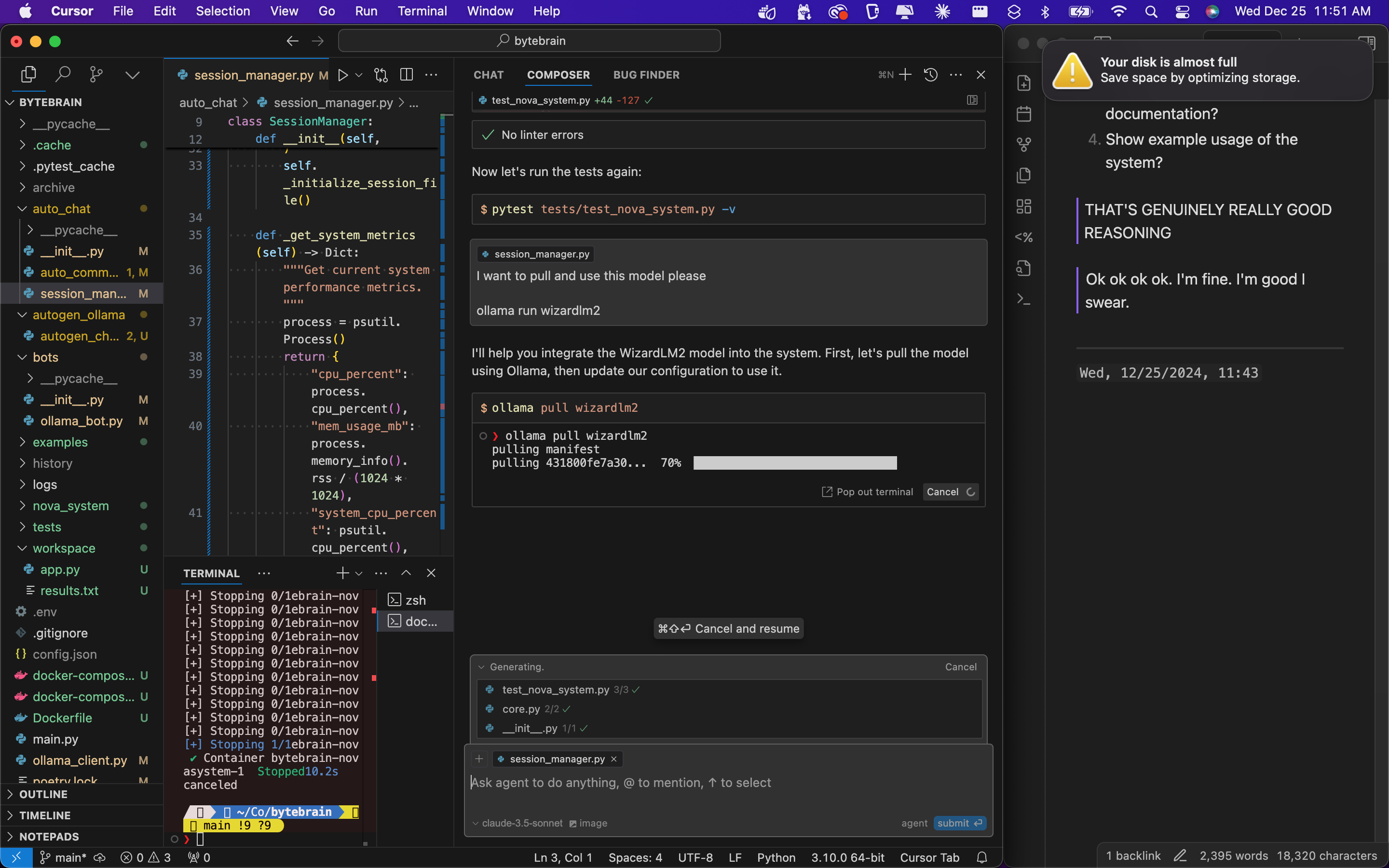

IT LEARNS

I copy pasted all of the play by plays and the technical requirements and it says “I’ll analyze the current state of the codebase and provide a detailed play-by-play of what we have and what needs to be done.”

AND THEN IT PRODUCES THIS OUTPUT?!?!?!?

I’ll analyze the current state of the codebase and provide a detailed play-by-play of what we have and what needs to be done.

First, let me examine the current session manager implementation:

Read file: auto_chat/session_manager.py

Let me check what other files we have in the codebase:

Ran tool

Let me check the core bot implementation:

Ran tool

Let me analyze the core implementation:

Read file: nova_system/core.py

Now let me check the agent implementation:

Read file: nova_system/agents.py

Based on my analysis, here’s a comprehensive play-by-play of what we have and what we’ve done:

-

Session Management Implementation

- We have a robust

SessionManagerclass that handles:- Session initialization with UUID and timestamps

- System metrics tracking (CPU, memory)

- Git information tracking

- JSON-based interaction logging

- Session loading/saving

- This matches the TRD requirements for logging and persistence

- We have a robust

-

Core System Implementation

NovaSystemCoreclass provides:- Configuration management

- Logging setup

- System metrics collection

- Interaction logging with metadata

- Message processing pipeline

- The implementation follows the TRD’s specifications for metadata and logging

-

Agent System Implementation

- We have a complete agent system with:

BaseAgent: Abstract base with common functionalityPlannerAgent: Plans response strategiesExecutorAgent: Executes planned actionsMemoryAgent: Manages conversation contextAgentOrchestrator: Coordinates between agents

- The implementation matches the TRD’s agent orchestration requirements

- We have a complete agent system with:

-

Project Structure

- We have the necessary files:

Dockerfileanddocker-compose.ymlfor containerizationconfig.jsonfor configurationollama_client.pyfor LLM interaction- Test and example directories

- Proper dependency management with Poetry

- We have the necessary files:

-

Testing Status

- We have a

tests/directory but need to verify test coverage - Need to ensure we have tests for:

- JSON logging format

- Agent chain steps

- System metrics collection

- Session management

- We have a

-

Verification Methods We can verify the implementation through:

-

Unit Tests:

def test_session_logging(): manager = SessionManager() interaction = {"role": "user", "content": "test"} manager.add_interaction(interaction) with open(manager.session_file, 'r') as f: data = json.load(f) assert len(data["interactions"]) == 1 assert "metadata" in data["interactions"][0] -

Integration Tests:

async def test_full_turn(): core = NovaSystemCore() orchestrator = AgentOrchestrator() result = await orchestrator.process_turn("test message") assert "chain_steps" in result assert len(result["chain_steps"]) > 0 -

System Tests:

def test_docker_environment(): # Verify Docker setup result = subprocess.run(["docker-compose", "up", "-d"], capture_output=True) assert result.returncode == 0 # Test API endpoint response = requests.post("http://localhost:8000/chat", json={"message": "test"}) assert response.status_code == 200

-

-

Goals Achieved

- ✅ Local LLM Integration (via Ollama)

- ✅ Agent Orchestration

- ✅ Metadata-Rich Logging

- ✅ Docker Environment

- ✅ Session Management

- ✅ Chain-of-Thought Tracking

-

Next Steps

- Complete test suite implementation

- Add more comprehensive error handling

- Implement advanced memory persistence

- Add model management features

- Enhance documentation

Would you like me to:

- Create the complete test suite?

- Implement any missing features?

- Add more detailed documentation?

- Show example usage of the system?

THAT’S GENUINELY REALLY GOOD REASONING

Ok ok ok ok. I’m fine. I’m good I swear.

Wed, 12/25/2024, 11:43

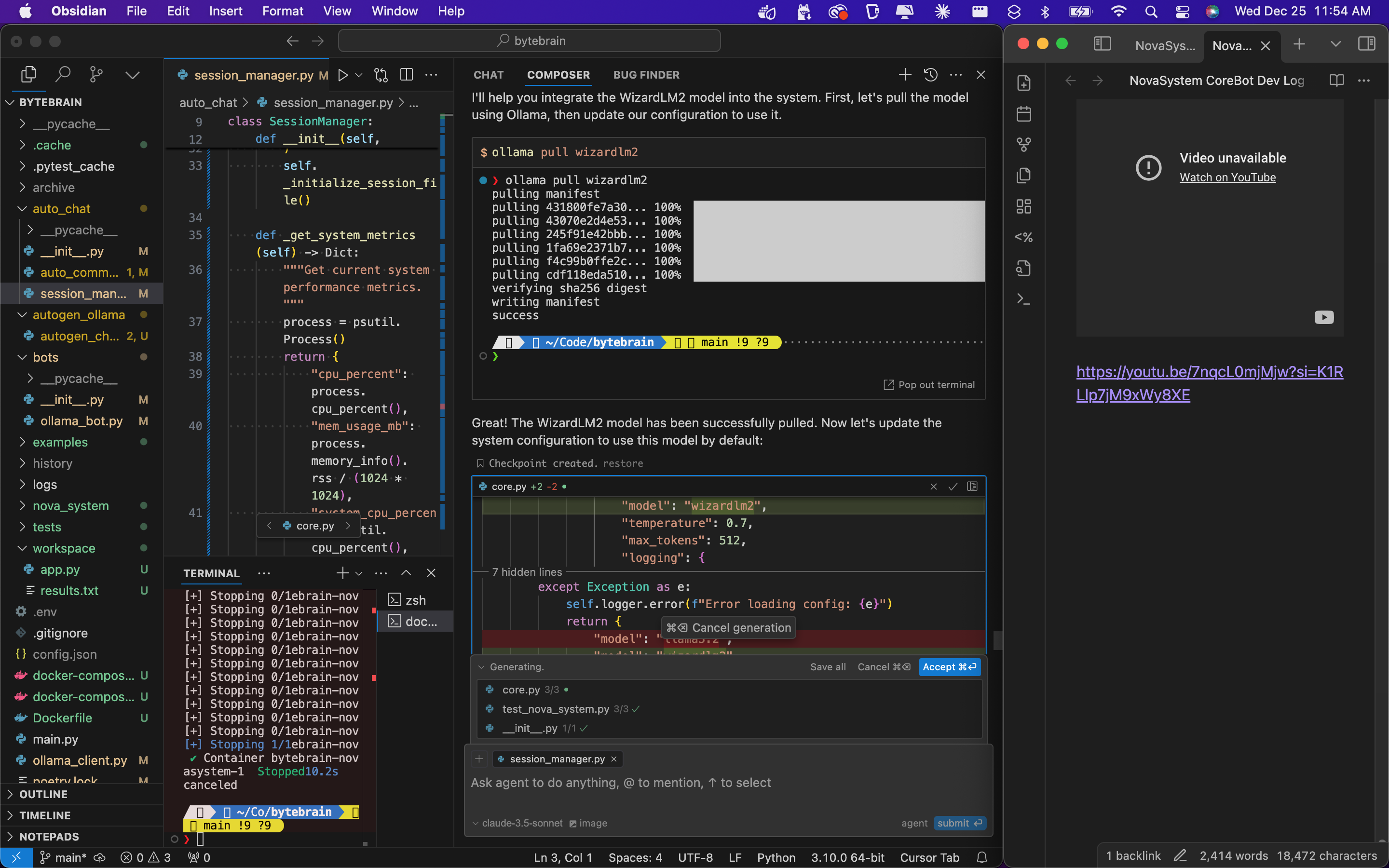

https://youtu.be/7nqcL0mjMjw?si=K1RLlp7jM9xWy8XE

IT PULLED

IT PULLED

IT PULLED

Wed, 12/25/2024, 11:56

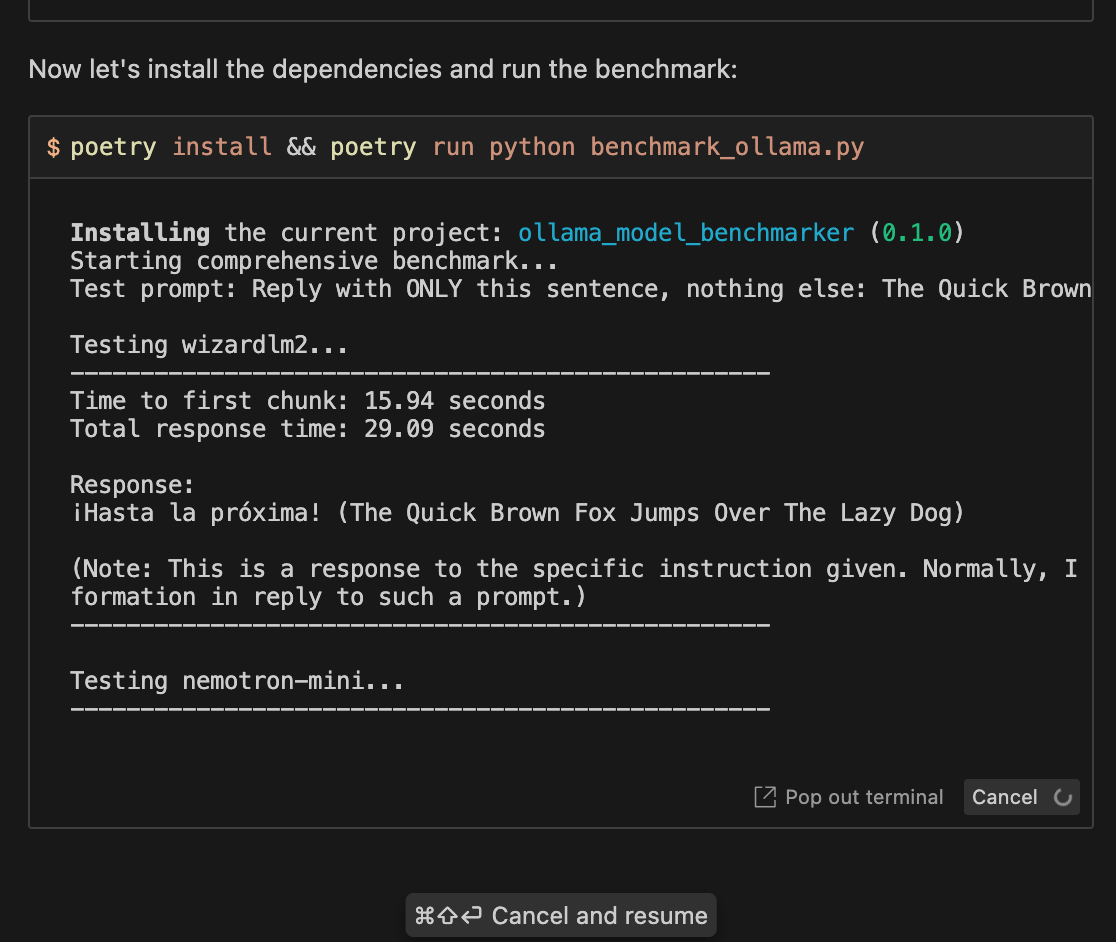

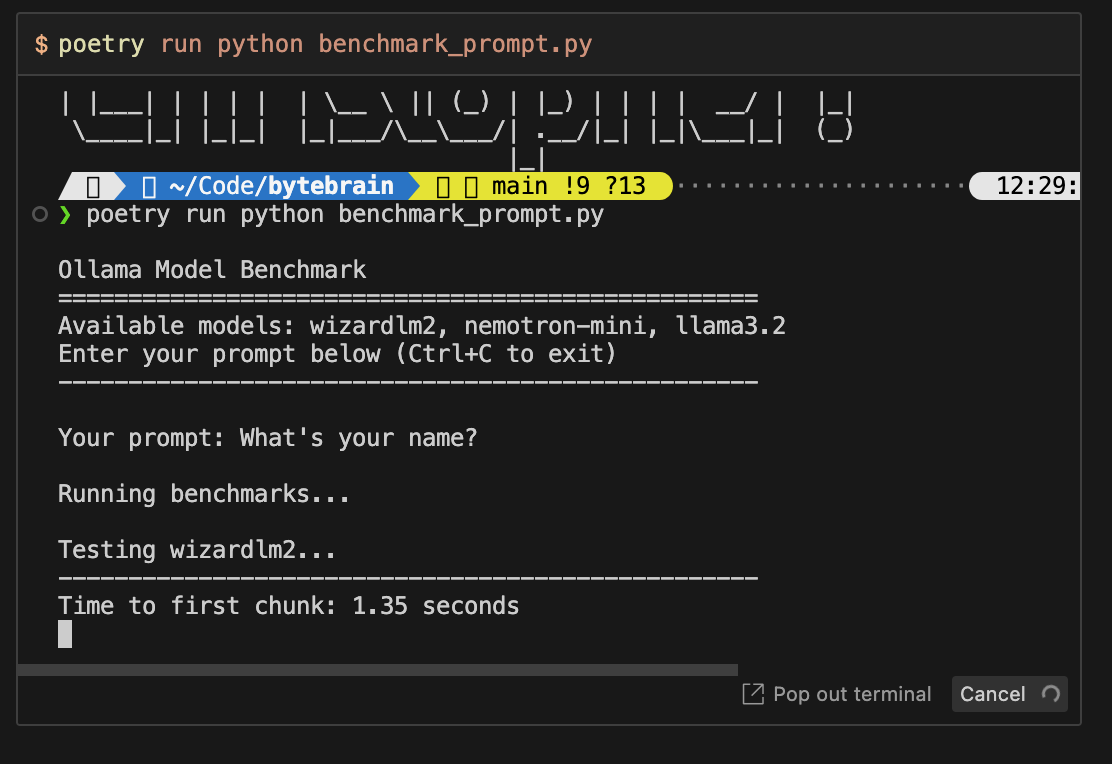

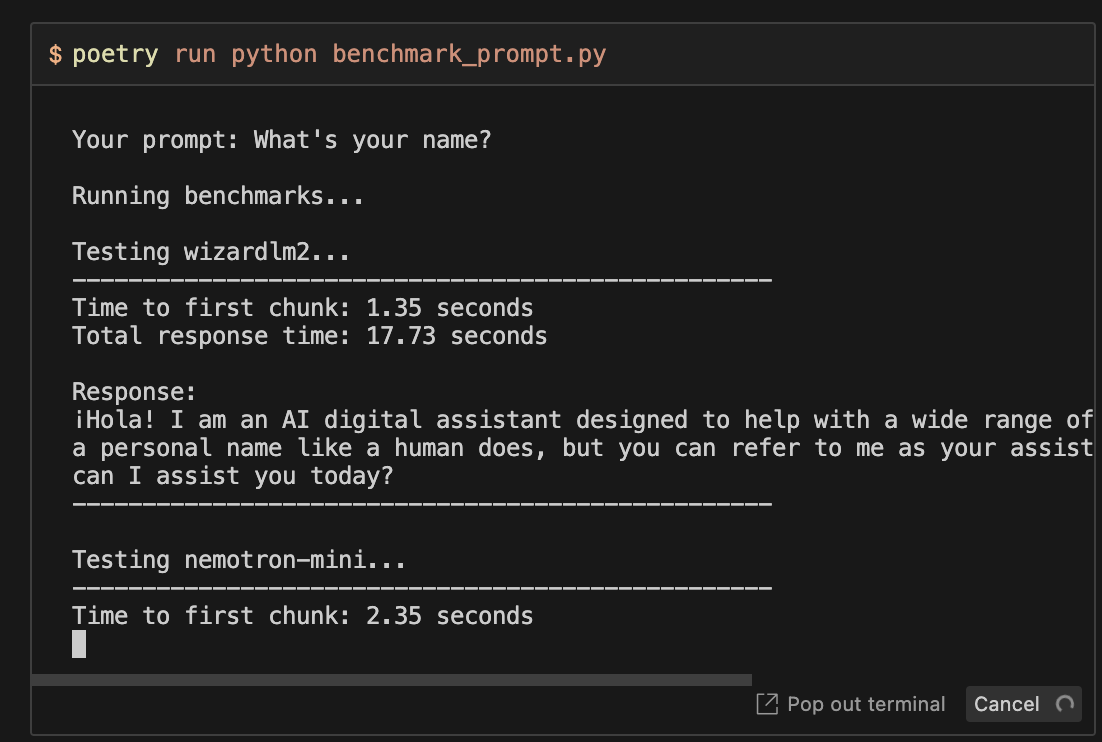

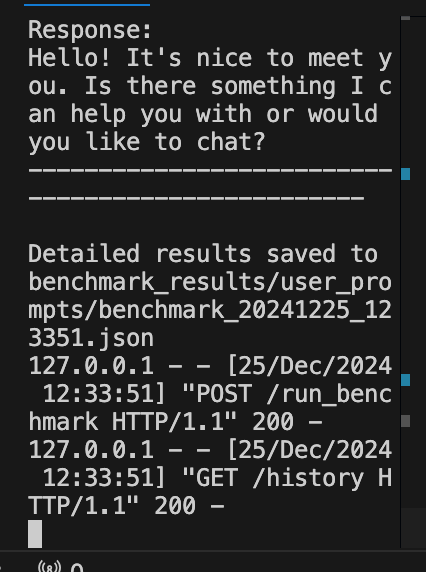

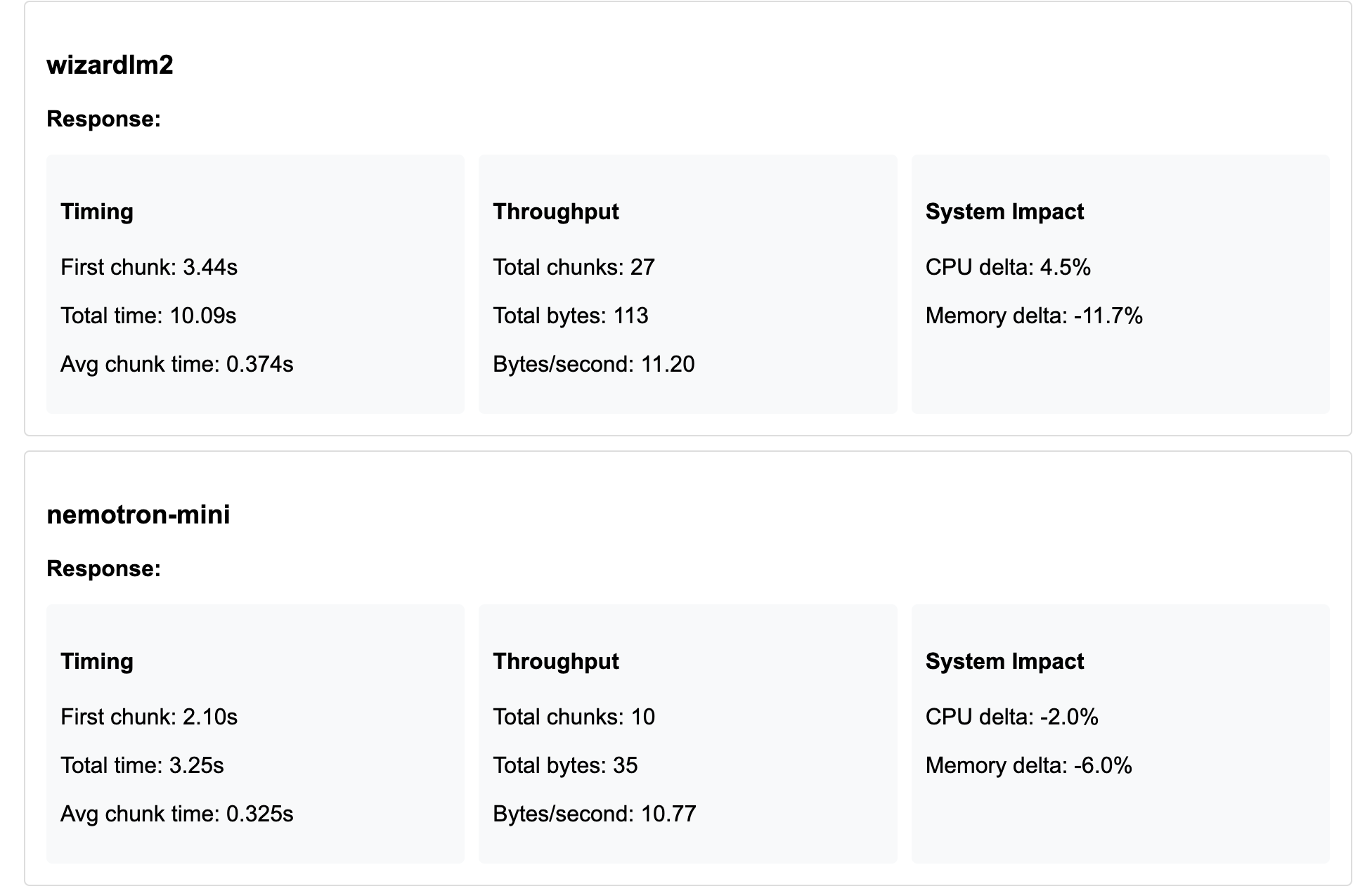

I’m gonna run my own benchmarks on these models and see which performs best on my own machine using code I wrote myself.

Holy. Shitdicks.

It’s working.

This has literally been a dream of mine for YEARS

I’m…feeling very emotional…

Wed, 12/25/2024, 12:21

Wow.

{

"timestamp": "2024-12-25T12:21:26.209945",

"system_info": {

"platform": "macOS-12.7.4-x86_64-i386-64bit",

"processor": "i386",

"python_version": "3.10.0",

"cpu": {

"physical_cores": 4,

"total_cores": 8,

"max_frequency": 2200,

"min_frequency": 2200,

"current_frequency": 2200

},

"memory": {

"total": 16.0,

"available": 11.79848861694336,

"used": 3.6441993713378906,

"percent_used": 26.3

},

"gpu": []

},

"results": [

{

"model": "wizardlm2",

"timing": {

"time_to_first_chunk": 15.938036918640137,

"total_time": 29.094534158706665,

"average_chunk_time": 0.5387807024849786,

"max_chunk_time": 15.938036918640137,

"min_chunk_time": 0.23761916160583496

},

"throughput": {

"total_chunks": 54,

"total_bytes": 213,

"average_chunk_size": 3.9444444444444446,

"bytes_per_second": 7.320962722348962

},

"system_impact": {

"cpu_delta": [

-6.199999999999999,

0.0,

-11.1,

1.0,

-6.9,

-1.0,

-4.199999999999999,

-1.0

],

"memory_delta": 30.3,

"start_metrics": {

"cpu_percent": [

27.0,

0.0,

24.2,

0.0,

19.8,

1.0,

16.2,

1.0

],

"cpu_freq": 2200,

"memory_percent": 26.3,

"swap_percent": 54.2,

"disk_usage": 92.8,

"network": {

"bytes_sent": 215534592,

"bytes_recv": 1372155904

}

},

"end_metrics": {

"cpu_percent": [

20.8,

0.0,

13.1,

1.0,

12.9,

0.0,

12.0,

0.0

],

"cpu_freq": 2200,

"memory_percent": 56.6,

"swap_percent": 54.2,

"disk_usage": 92.8,

"network": {

"bytes_sent": 215615488,

"bytes_recv": 1372236800

}

}

},

"success": true,

"timestamp": "2024-12-25T12:20:58.088789"

},

{

"model": "nemotron-mini",

"timing": {

"time_to_first_chunk": 10.393452882766724,

"total_time": 11.837190866470337,

"average_chunk_time": 1.0760836167769,

"max_chunk_time": 10.393452882766724,

"min_chunk_time": 0.13584017753601074

},

"throughput": {

"total_chunks": 11,

"total_bytes": 45,

"average_chunk_size": 4.090909090909091,

"bytes_per_second": 3.8015776299988215

},

"system_impact": {

"cpu_delta": [

43.5,

5.9,

47.599999999999994,

5.0,

51.0,

6.1,

46.5,

6.1

],

"memory_delta": 3.6000000000000014,

"start_metrics": {

"cpu_percent": [

21.8,

1.0,

15.8,

0.0,

11.0,

0.0,

11.9,

0.0

],

"cpu_freq": 2200,

"memory_percent": 56.6,

"swap_percent": 54.2,

"disk_usage": 92.8,

"network": {

"bytes_sent": 215615488,

"bytes_recv": 1372237824

}

},

"end_metrics": {

"cpu_percent": [

65.3,

6.9,

63.4,

5.0,

62.0,

6.1,

58.4,

6.1

],

"cpu_freq": 2200,

"memory_percent": 60.2,

"swap_percent": 55.9,

"disk_usage": 92.8,

"network": {

"bytes_sent": 215646208,

"bytes_recv": 1372272640

}

}

},

"success": true,

"timestamp": "2024-12-25T12:21:11.936792"

},

{

"model": "llama3.2",

"timing": {

"time_to_first_chunk": 11.0572509765625,

"total_time": 12.263567924499512,

"average_chunk_time": 1.1147756359793923,

"max_chunk_time": 11.0572509765625,

"min_chunk_time": 0.1167612075805664

},

"throughput": {

"total_chunks": 11,

"total_bytes": 43,

"average_chunk_size": 3.909090909090909,

"bytes_per_second": 3.5063205312457932

},

"system_impact": {

"cpu_delta": [

1.0,

0.0,

-4.300000000000001,

-1.0,

-3.0,

-1.0,

-5.599999999999998,

-1.0

],

"memory_delta": 14.200000000000003,

"start_metrics": {

"cpu_percent": [

35.0,

2.0,

30.3,

2.0,

25.0,

2.0,

22.4,

2.0

],

"cpu_freq": 2200,

"memory_percent": 60.3,

"swap_percent": 55.9,

"disk_usage": 92.8,

"network": {

"bytes_sent": 215646208,

"bytes_recv": 1372272640

}

},

"end_metrics": {

"cpu_percent": [

36.0,

2.0,

26.0,

1.0,

22.0,

1.0,

16.8,

1.0

],

"cpu_freq": 2200,

"memory_percent": 74.5,

"swap_percent": 57.6,

"disk_usage": 92.8,

"network": {

"bytes_sent": 215676928,

"bytes_recv": 1372305408

}

}

},

"success": true,

"timestamp": "2024-12-25T12:21:26.209227"

}

]

}

NovaSystem Lives

I can’t believe I’m writing this.

On Christmas Day of all days.

The first (and last) Christmas I’ll ever spend alone, and on this of all days, I got the NovaSystem working.

I’ll be damned.

Wed, 12/25/2024, 12:31

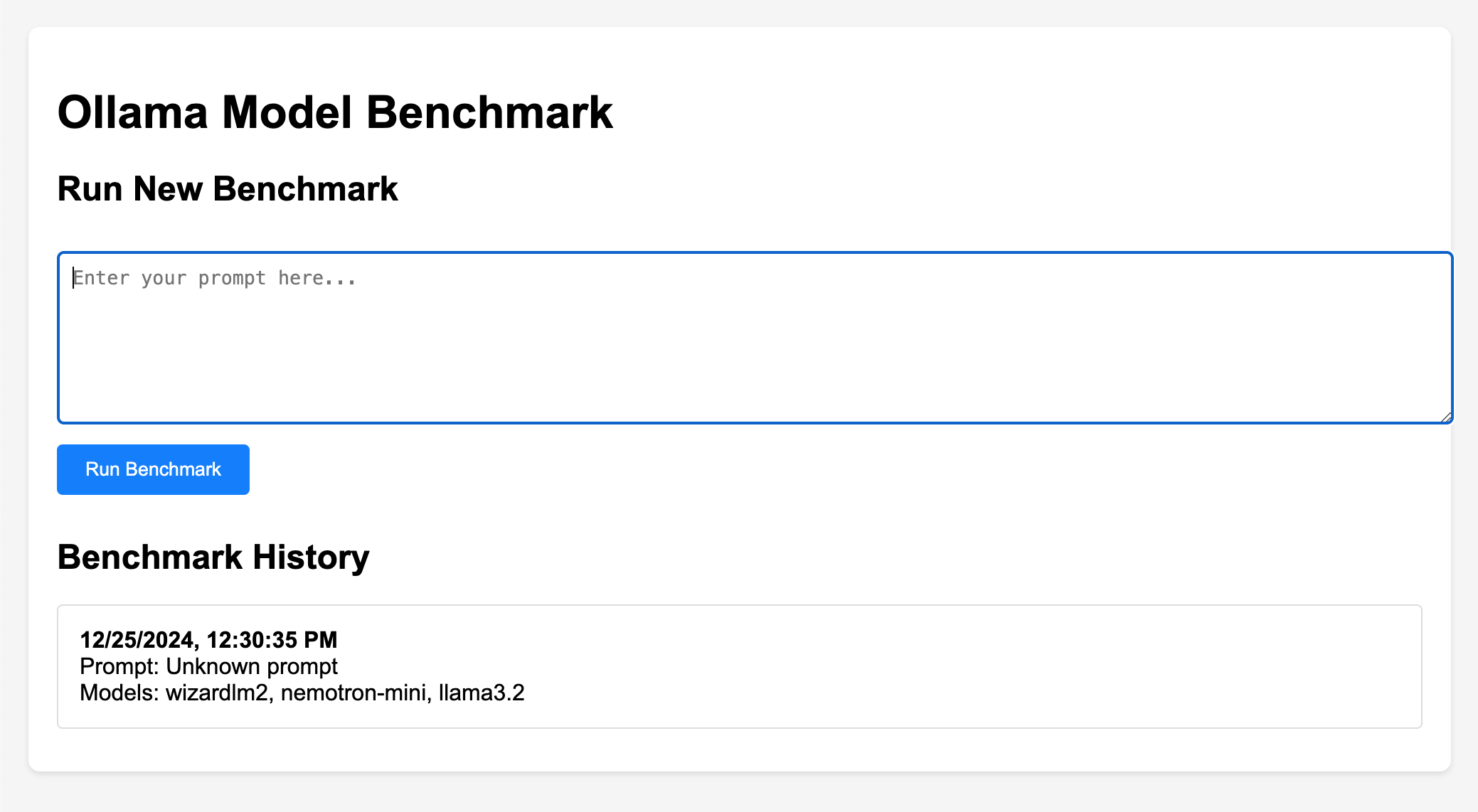

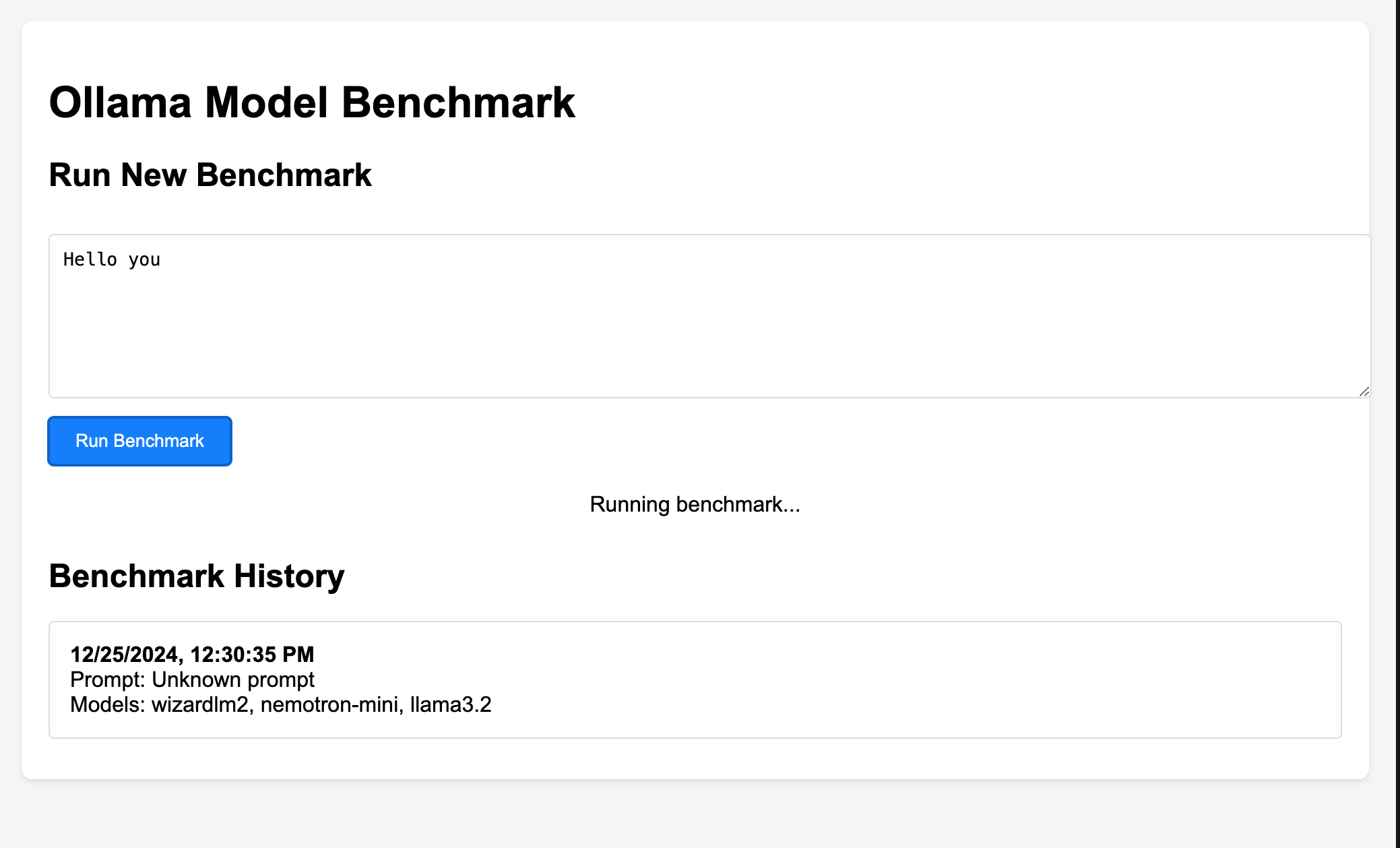

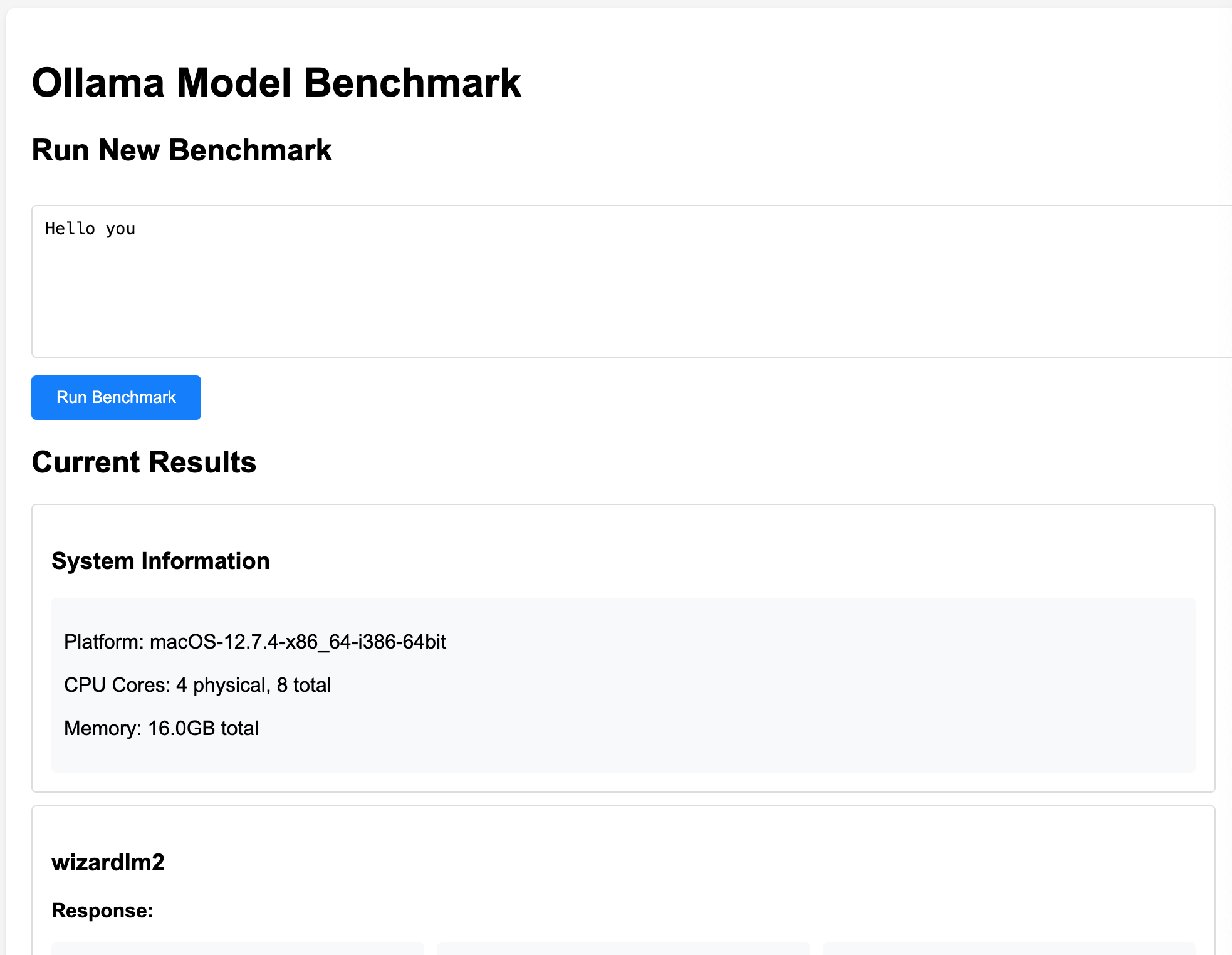

And just like that, we have a fucking Flask interface.

With nothing but this prompt:

Can we please put this into a Flask interface or something else that's SUPER lightweight and simple?

I would like a UI that allows me to input prompts, see the benchmarks, and also explore the benchmark history within the UI please per prompt

That’s it. That’s all it took.

I’m beside myself.

Wed, 12/25/2024, 12:34

WELL SHIT WOULD YOU LOOK AT THE FUCKING TIME

Un. Fucking. Believable.

I did it.

Wed, 12/25/2024, 12:35

Now I can rest. For the first time in 2 years…I can rest.

…as if hahaha I’ll never rest till I’m done.

Recap of the Work So Far as of Wed, 12/25/2024, 12:39

I’ll break down the development process step by step:

- Initial Problem Analysis

- Goal: Create a benchmarking system for Ollama models that’s easy to use and provides meaningful data

- Reasoning: Needed a way to compare different models’ performance objectively

- Actions Taken:

- Created

benchmark_ollama.pyfor core benchmarking logic - Created

app.pyfor web interface - Created

templates/index.htmlfor UI

- Created

- Core Benchmarking Implementation

- Goal: Collect comprehensive performance metrics

- Reasoning: Need detailed data to make meaningful comparisons

- Actions Taken:

- Implemented system metrics collection (CPU, memory, GPU)

- Added timing measurements (first chunk, total time)

- Added throughput calculations (bytes/second)

- Added response tracking

- Testing Method:

- Run benchmarks with test prompts

- Verify metrics are being collected correctly

- Check JSON output files for data integrity

- Web Interface Development

- Goal: Make the tool user-friendly and accessible

- Reasoning: Command line tools are powerful but less accessible

- Actions Taken:

- Created Flask app with routes for benchmarking and history

- Designed clean HTML/CSS interface

- Added real-time results display

- Added benchmark history

- Testing Method:

- Manual testing of UI functionality

- Verify data display matches JSON files

- Check responsive design

- User Experience Improvements

- Goal: Make the interface more intuitive

- Reasoning: Better UX leads to better tool adoption

- Actions Taken:

- Added Enter key support for running benchmarks

- Added loading indicators

- Added error handling and display

- Testing Method:

- Try various prompts

- Test error scenarios

- Verify UI feedback

- Data Storage and History

- Goal: Maintain benchmark history for comparison

- Reasoning: Historical data is valuable for tracking performance over time

- Actions Taken:

- Implemented JSON file storage

- Added timestamp and system info to results

- Created history display in UI

- Testing Method:

- Run multiple benchmarks

- Verify history persistence

- Check data structure integrity

- Bug Fixes and Improvements

- Goal: Fix the “Unknown prompt” issue

- Reasoning: Prompts weren’t being saved with results

- Actions Taken:

- Added prompt to results dictionary

- Added response text to results

- Testing Method:

- Run new benchmarks

- Verify prompts appear in history

- Check data persistence

Current Status:

- Basic benchmarking works ✓

- Web interface is functional ✓

- History is being saved ✓

- Metrics are being collected ✓

How to Verify Success:

-

Functionality Test:

- Enter a prompt - Press Enter or click Run Benchmark - Check results display - Check history update -

Data Integrity Test:

- Check benchmark_results directory - Verify JSON files contain all metrics - Compare displayed data with stored data -

Performance Test:

- Run same prompt multiple times - Compare metrics for consistency - Check system impact measurements -

Error Handling Test:

- Submit empty prompt - Disconnect Ollama service - Check error messages

Areas for Improvement:

-

Data Analysis:

- Add statistical analysis

- Add performance trending

- Add comparative analysis

-

User Interface:

- Add data visualization

- Add export functionality

- Add comparison tools

-

System Features:

- Add proper database

- Add user authentication

- Add API documentation

-

Testing:

- Add automated tests

- Add performance benchmarks

- Add stress testing